IrisCTF 2025!

I participated in IrisCTF 2025, organized by IrisSec, as a member of the InfoSecIITR team. Our team secured an impressive 9th place globally. These writeups detail all the OSINT and Forensics challenges that I personally solved during the competition. I won the Writeup Prize for the Tracem2 Challenge ;)

OSINT

OSINT Disclaimer

Challenge Description

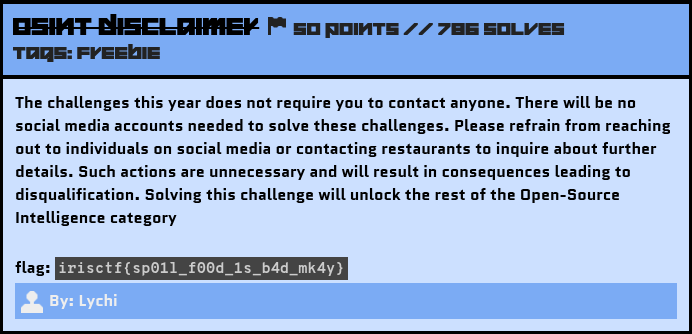

OSINT DISCLAIMER 50 points // 786 solves

Tags: freebie

The challenges this year does not require you to contact anyone. There will be no social media accounts needed to solve these challenges. Please refrain from reaching out to individuals on social media or contacting restaurants to inquire about further details. Such actions are unnecessary and will result in consequences leading to disqualification. Solving this challenge will unlock the rest of the Open-Source Intelligence category

By: Lychi

Solution

The flag for this challenge was included in the challenge description.

This time, the OSINT challenges were based entirely on a food blog website and a few images. Thanks to Lychi and Bobby for creating these amazing OSINT challenges; I managed to solve all of them.

Flag:

```
irisctf{sp01l_f00d_1s_b4d_mk4y}
```

Checking Out Of Winter

Challenge Description

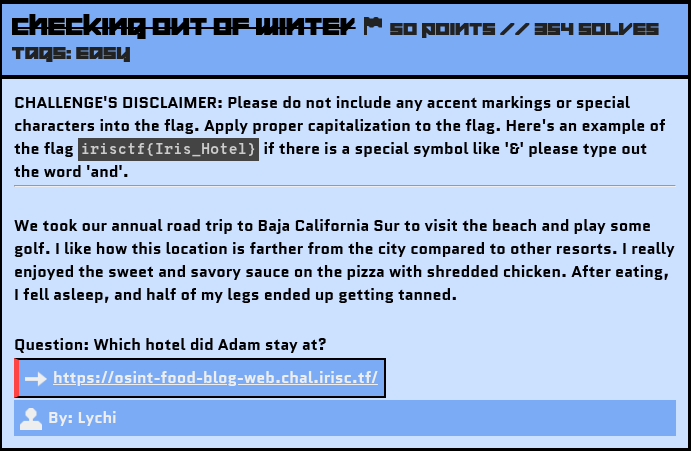

Blog’s Link: https://osint-food-blog-web.chal.irisc.tf/

Message

For beginners, I would say that doing OSINT challenges is really fun and makes you feel like an investigator. You just have to narrow down the useful data from all the information available to you. Always check for keywords, regional distinctions, special marks, or buildings, as the victim often leaves minor traces by mistake, which can help you identify them.

I created this writeup in detail, especially for first-time solvers. 😉

Solution

In the challenge description, keywords like Baja California Sur, Beach, Golf, and the hotel's location far from the main city are traces left by the victim that can help us move forward.

Exploring the Clues

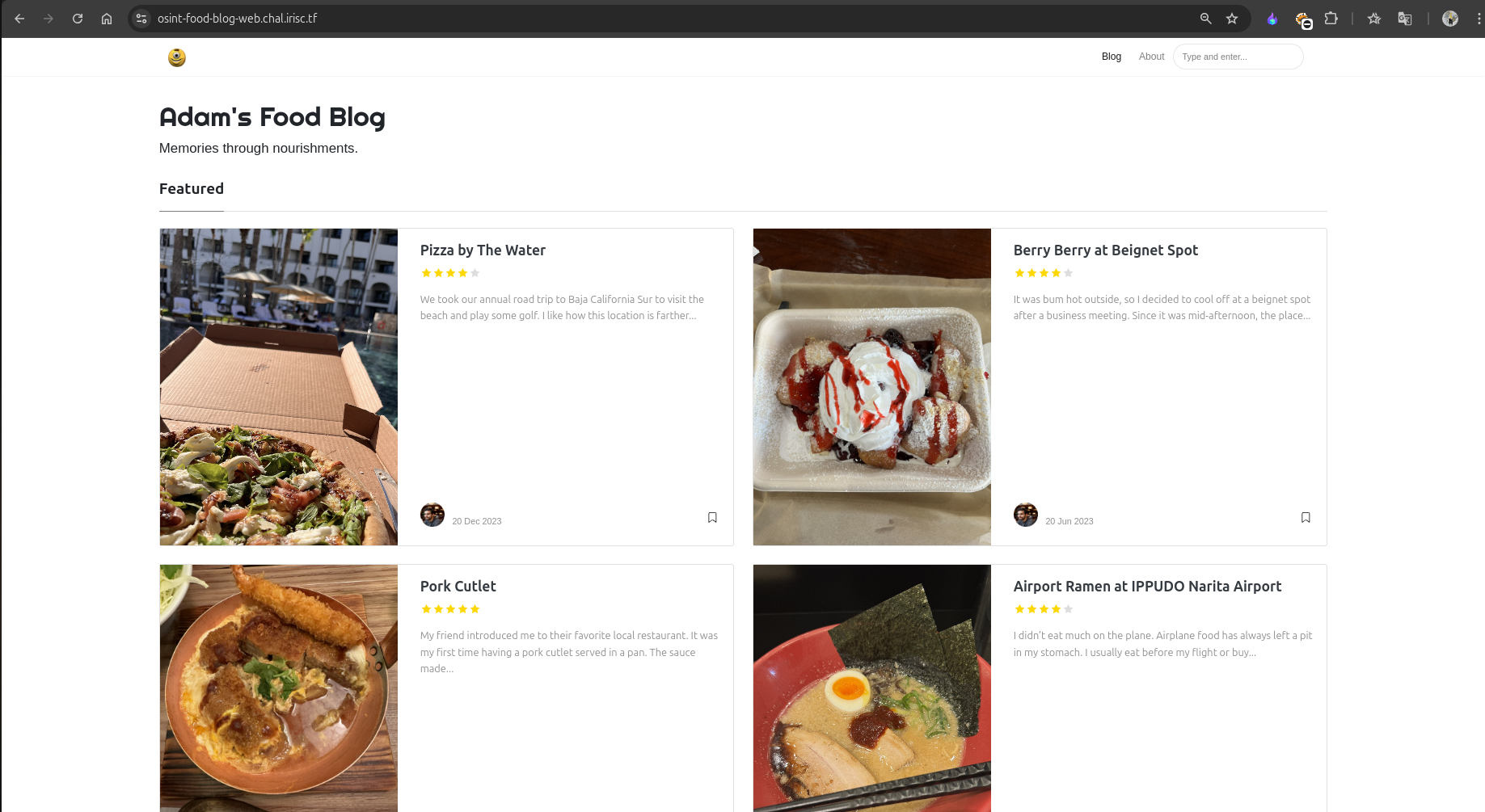

Let’s visit the website:

On the website, we find many blogs and stories by Adam about the places he visited and the food he ate.

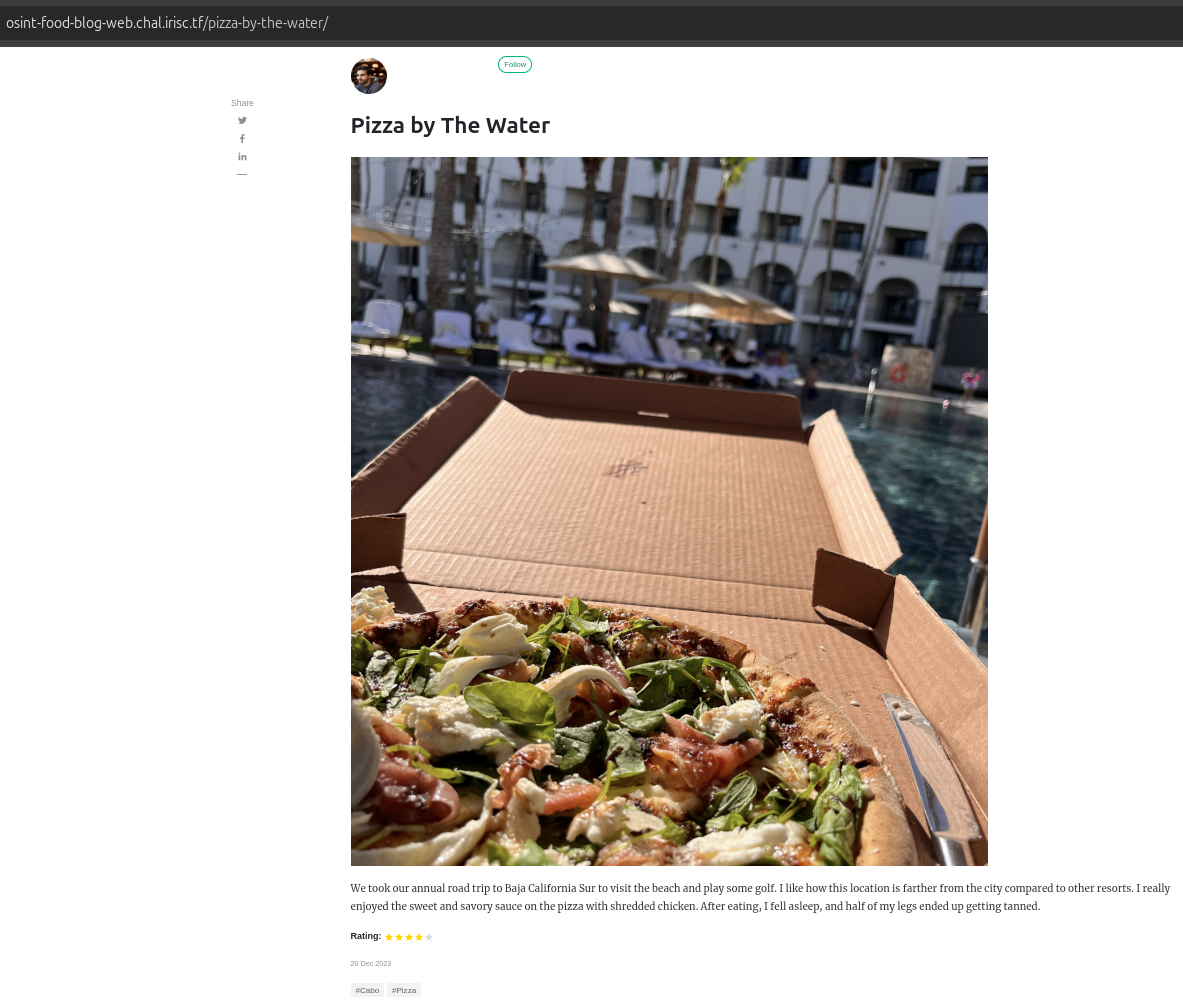

By matching the description, we identify that the Pizza by The Water blog is the most relevant one for this challenge.

In the blog’s image, we notice the hotel’s architecture in the background, along with a pool. Since we have an image related to the location, we can perform a Reverse Image Search using Google Lens.

Note: If the location is based in Russia, Yandex is preferable for reverse image searches. Similarly, if a country has its own powerful search engine, use that for better results.

Initially, when I searched using the full image (including the pizza and the hotel), most results were about pizzas, related sites, and posts.

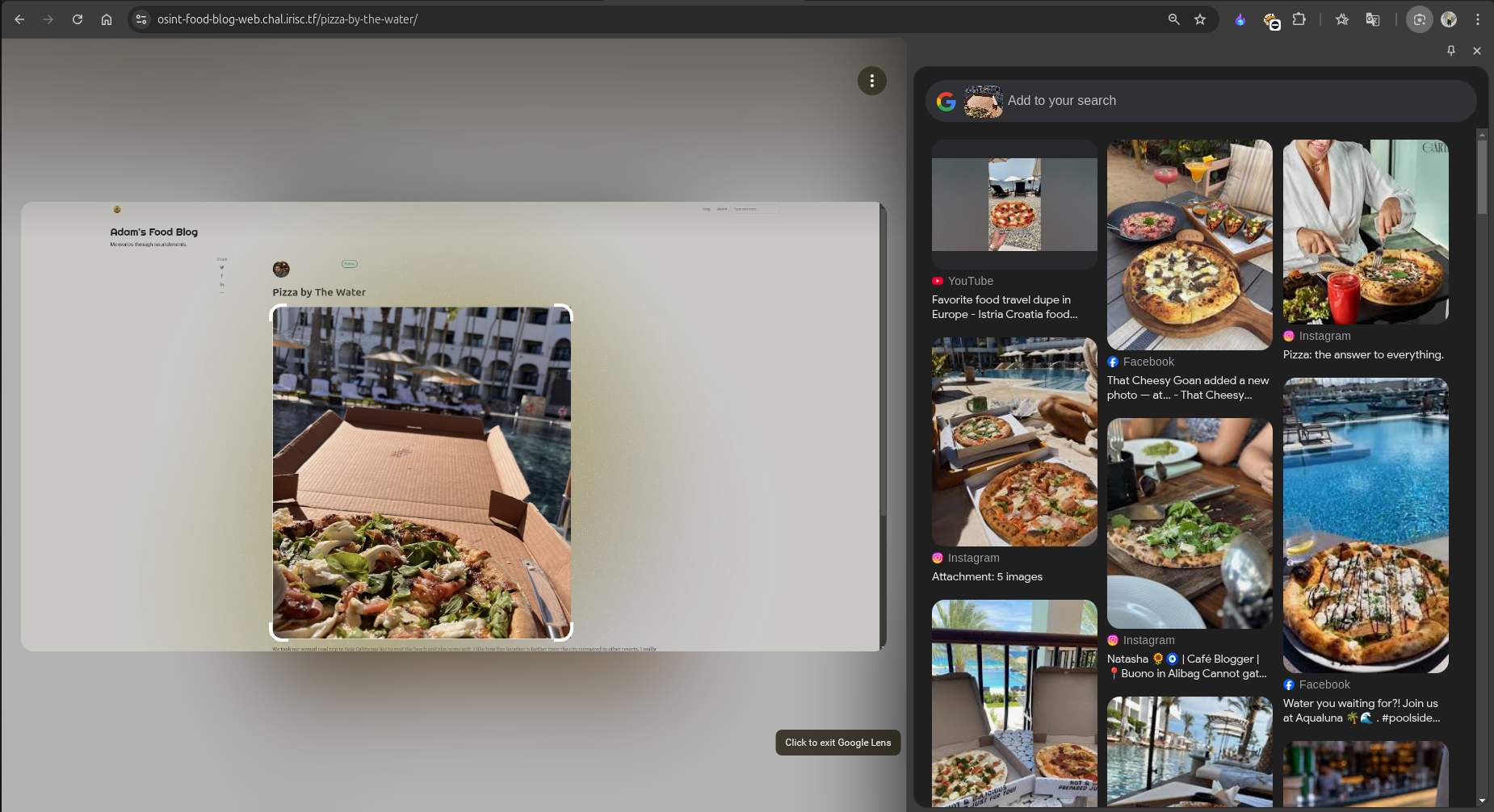

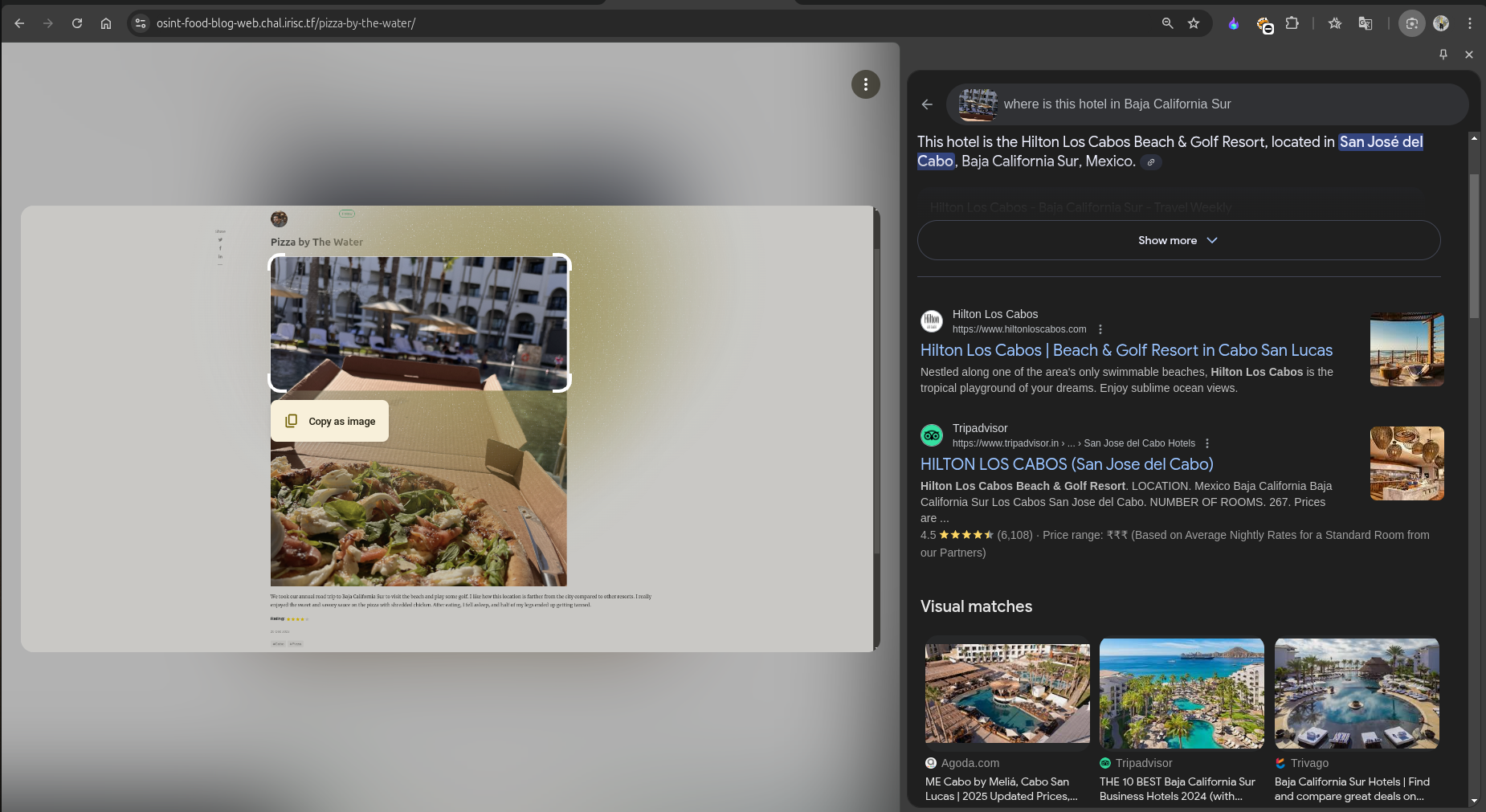

To refine the search, we need to narrow down the content we are focusing on. For the next search, I used only the hotel’s image and included the location Baja California Sur. This helped narrow down the results significantly.

In the refined search results, as seen above, the AI and other sites directly suggested the hotel’s name, unlike the first search. This demonstrates the importance of crafting an effective search prompt, as search engines have vast amounts of data to process.

Creating the Flag

With the gathered information, let’s construct the flag using the defined format.

Flag:

```
irisctf{Hilton_Los_Cabos_Beach_and_Golf_Resort}
```

Hurray!!!

Sleuths and Sweets

Challenge Description

Blog’s Link: https://osint-food-blog-web.chal.irisc.tf/

Solution

If you came directly to this challenge's writeup, I recommend first reading my Checking Out Of Winter writeup, which is more detailed and includes some important notes.

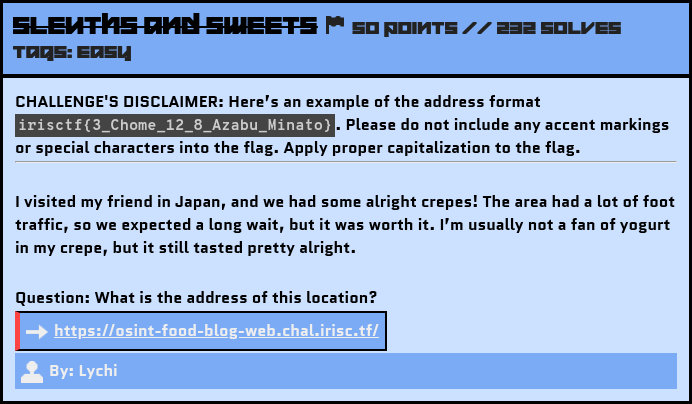

So again, let's search for keywords. I found these: Japan, Crepes, and Foot Traffic, traces left by the victim that can help us move forward.

Let’s visit the website for this blog:

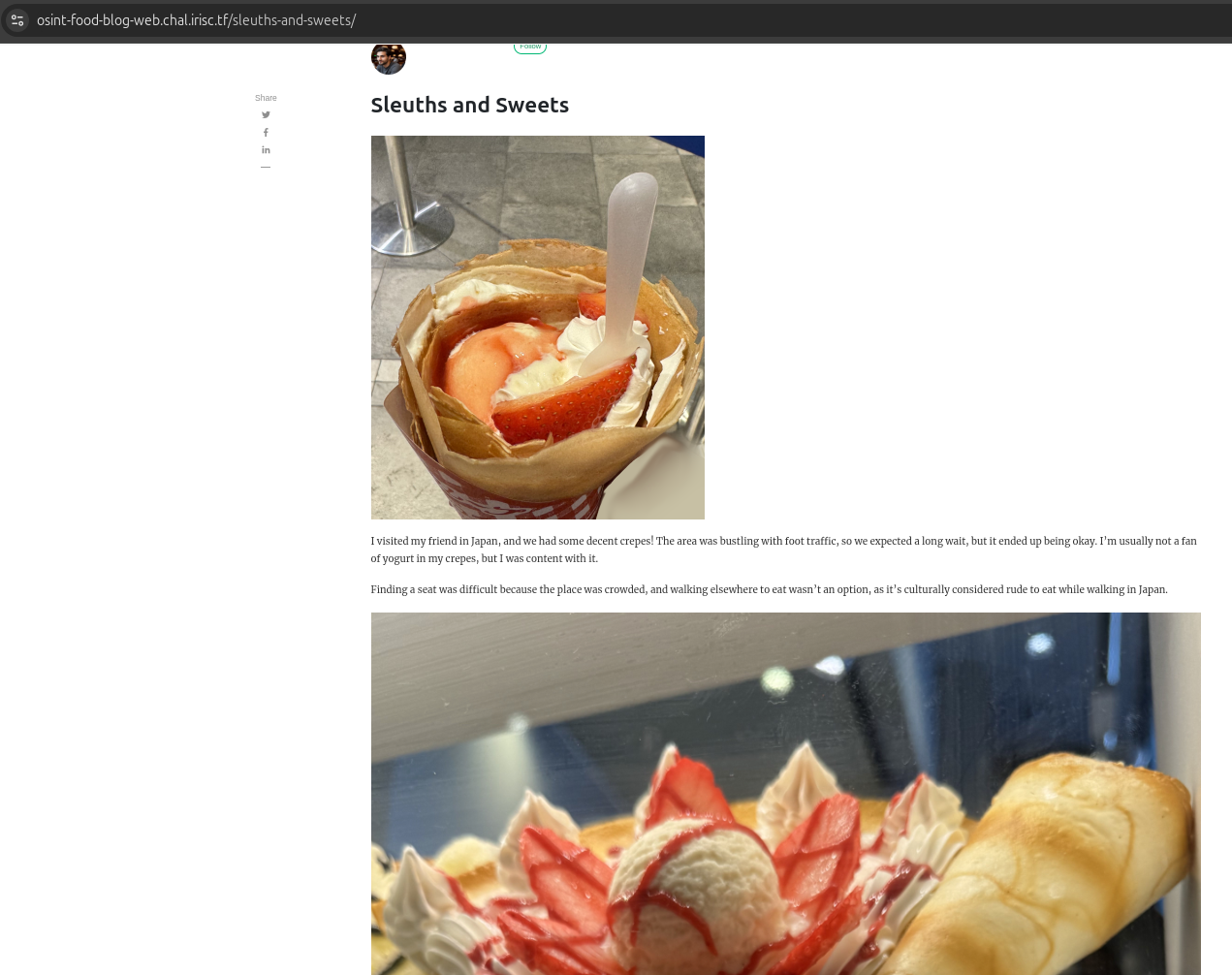

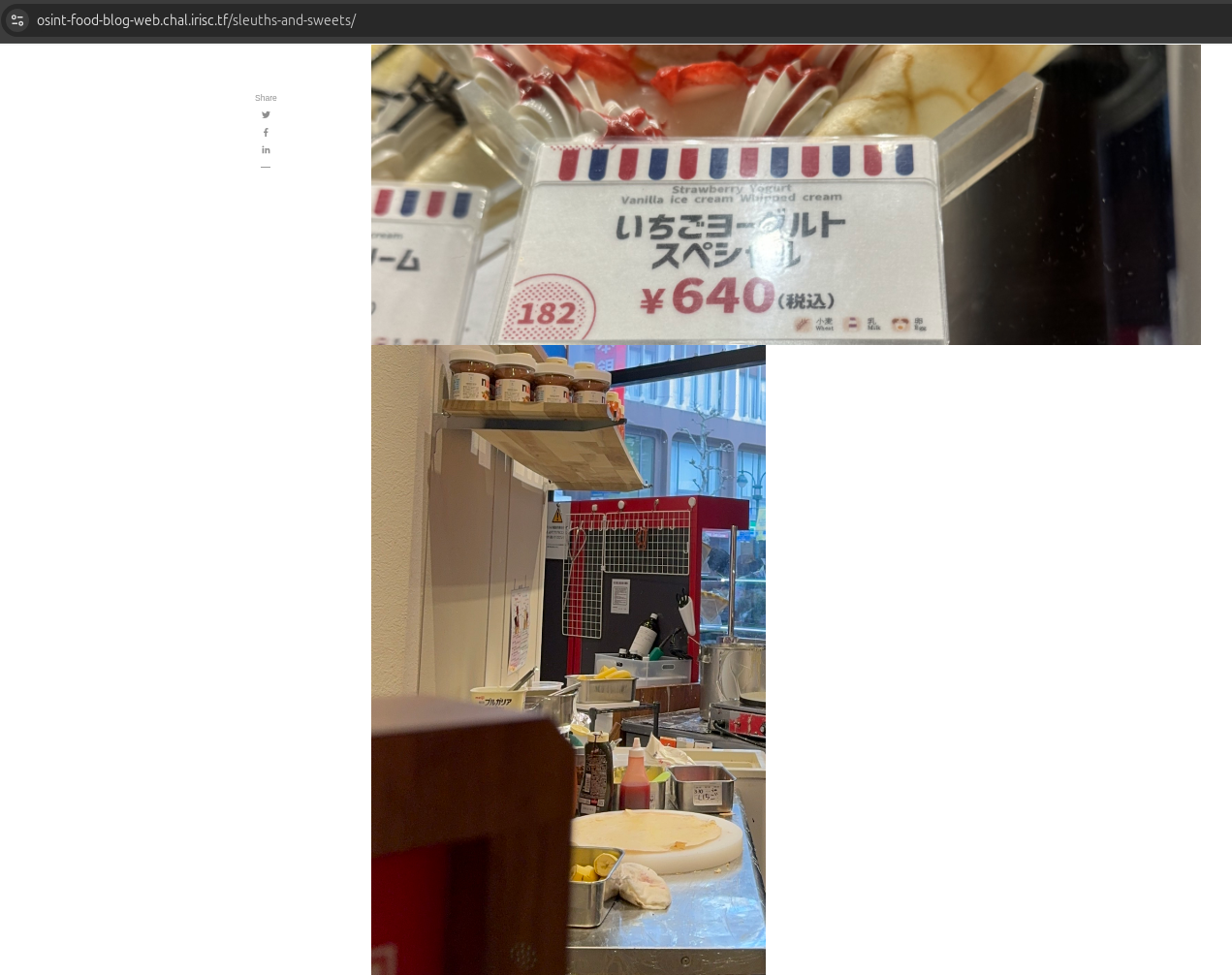

This time, we had 3 images. When I did a Reverse Image Search for the 1st and the 3rd images, I didn’t get much exact information because they looked very common across Japan.

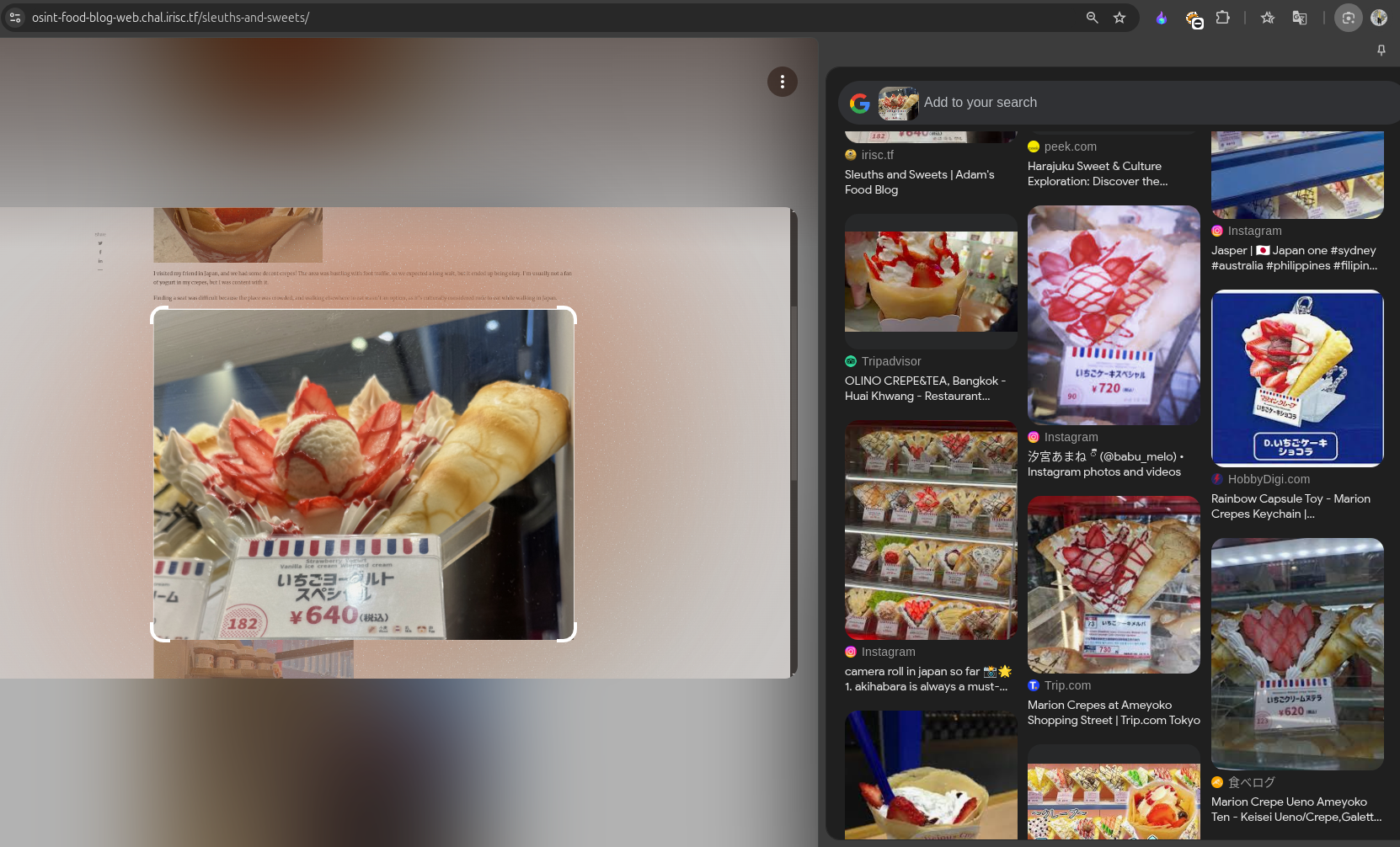

When I did a Reverse Image Search for the 2nd image, I got these results:

The results suggested Marion Crepe Ueno Ameyoko Store, which turned out not to be the correct address for the flag.

Exploring Another Way

So this time, I tried another method. As the description mentioned Foot Traffic, I searched for the place with the highest foot traffic in Japan.

The place with the highest foot traffic in Japan is Shibuya Crossing in Tokyo, which is widely considered the busiest pedestrian crossing in the world, with thousands of people crossing at once during rush hour.

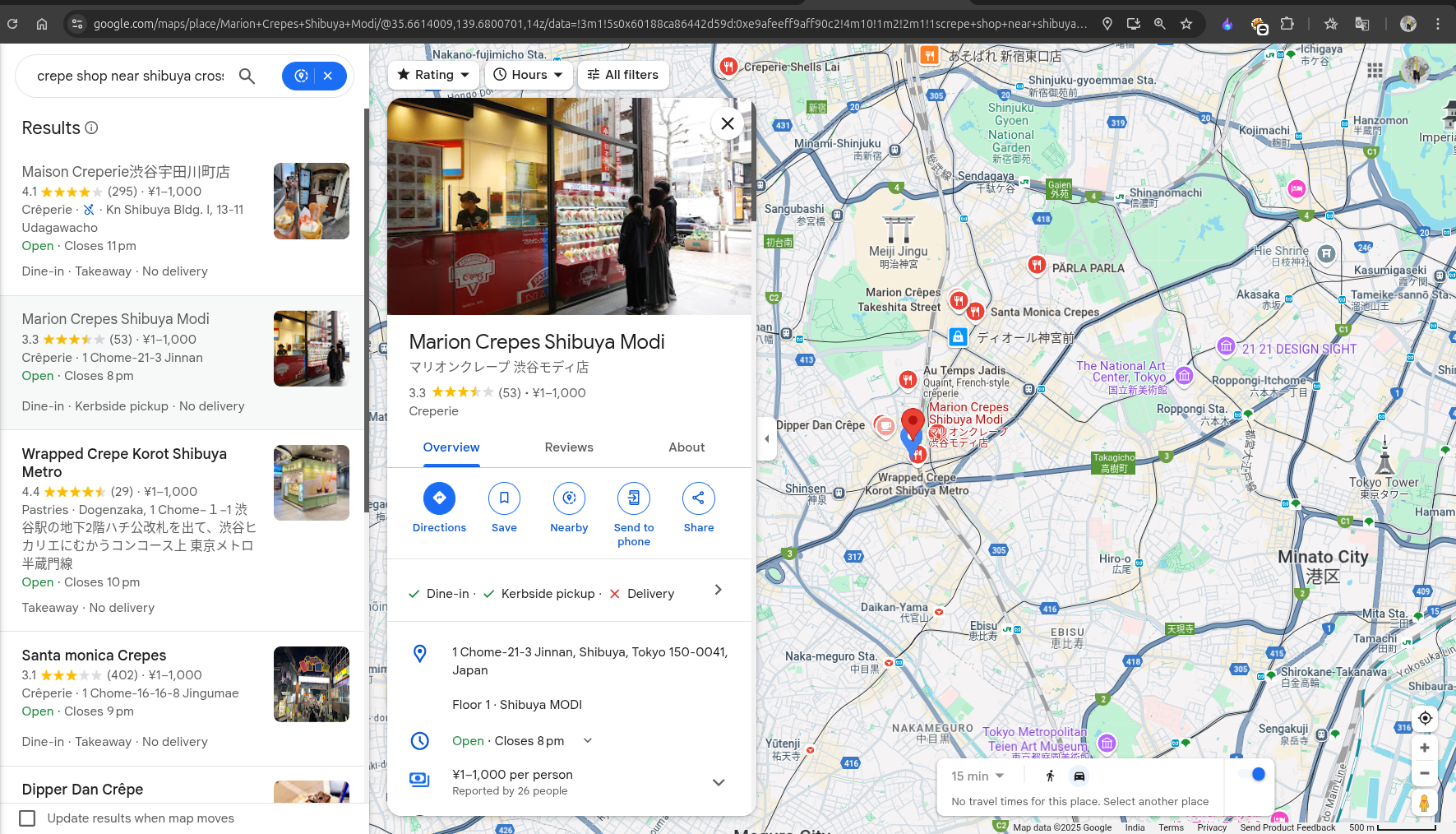

I then refined my search for crepe shops near Shibuya Crossing on Google Maps. After checking several stores, I finally found the correct one, as shown below:

Location: Marion Crepes Shibuya Modi

It had similar images to those given in the blog.

Creating the Flag

With the gathered information, let’s construct the flag using the defined format.

Flag:

```
irisctf{1_Chome_21_3_Jinnan_Shibuya}
```

Another one solved! 🥳

Not Eelaborate

Challenge Description

Blog’s Link: https://osint-food-blog-web.chal.irisc.tf/

Solution

If you came directly to this challenge's writeup, I recommend first reading my Checking Out Of Winter writeup, which is more detailed and includes some important notes.

So again, let's search for keywords. I found these: the title (Eel), Deer Park, Japanese Food, and Quiet Restaurant, traces left by the victim that can help us move forward.

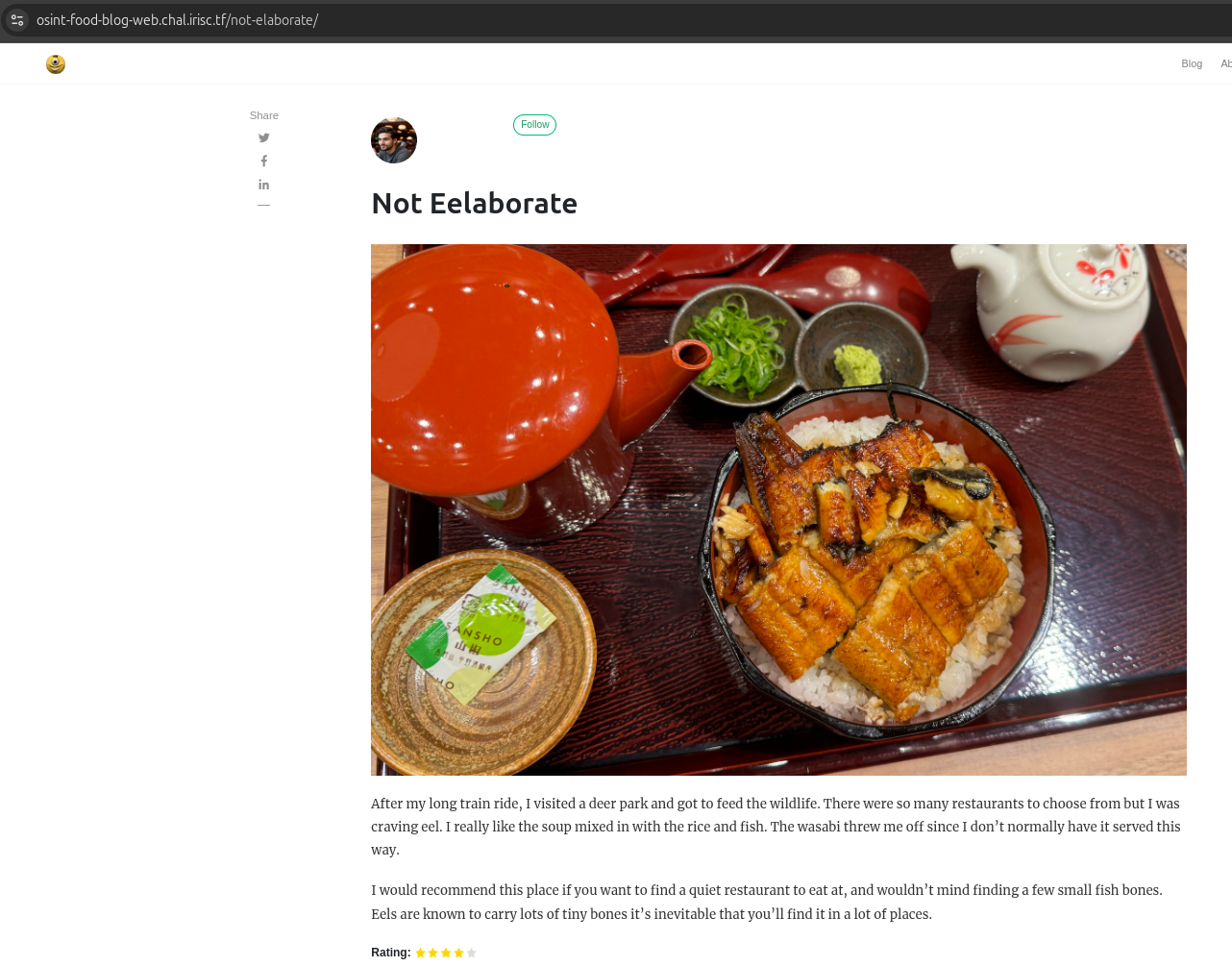

Let’s visit the website for this blog:

So, I started searching for Deer Parks in Japan and found one famous Deer Park, Nara Park, which helped me narrow down my search.

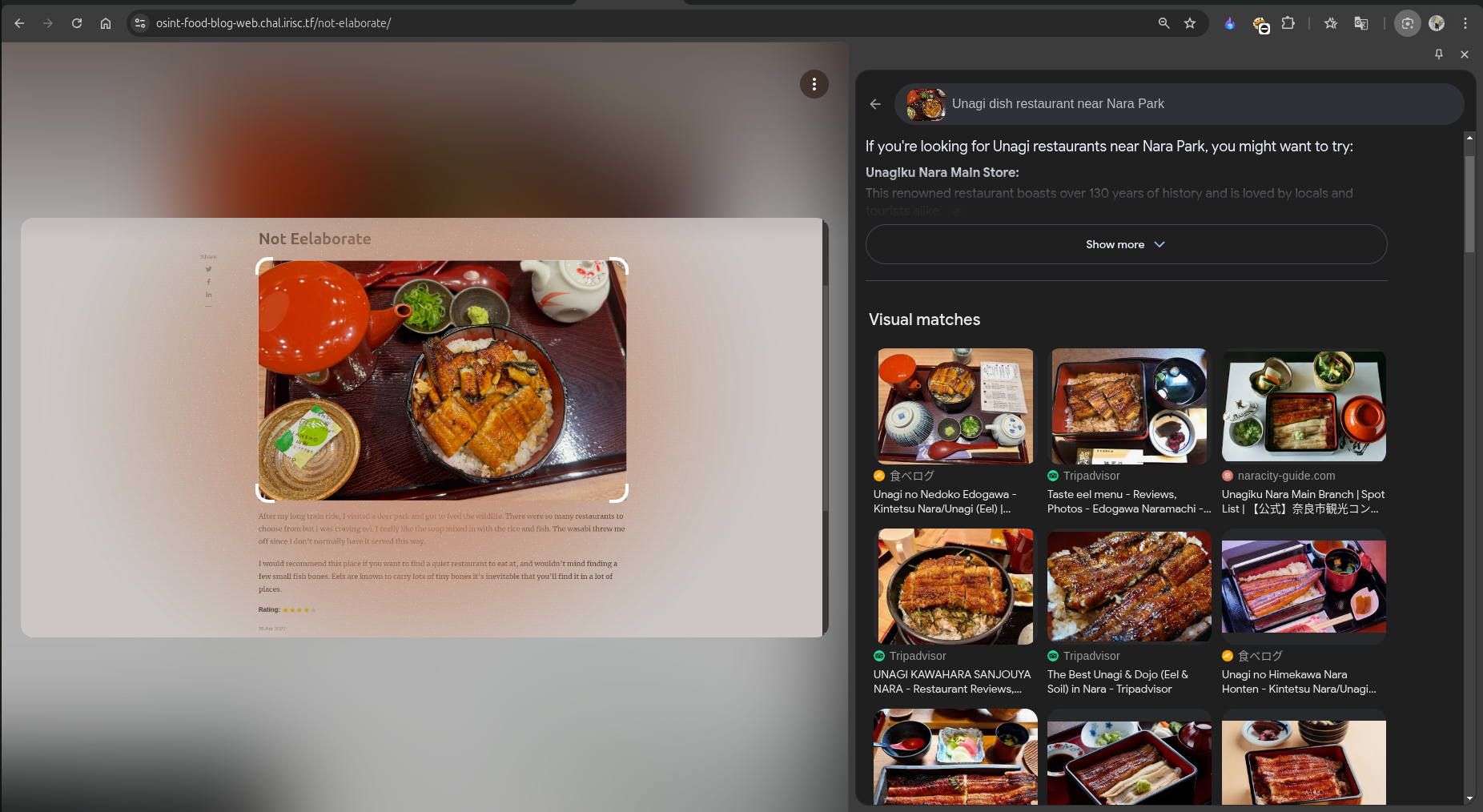

The food dishes mentioned in the blog hinted at the Unagi Dish. I then did a Reverse Image Search for the given image using the prompt Unagi Dish Restaurant near Nara Park. This search suggested some restaurants, and the first restaurant had a similar dish plate, as shown below:

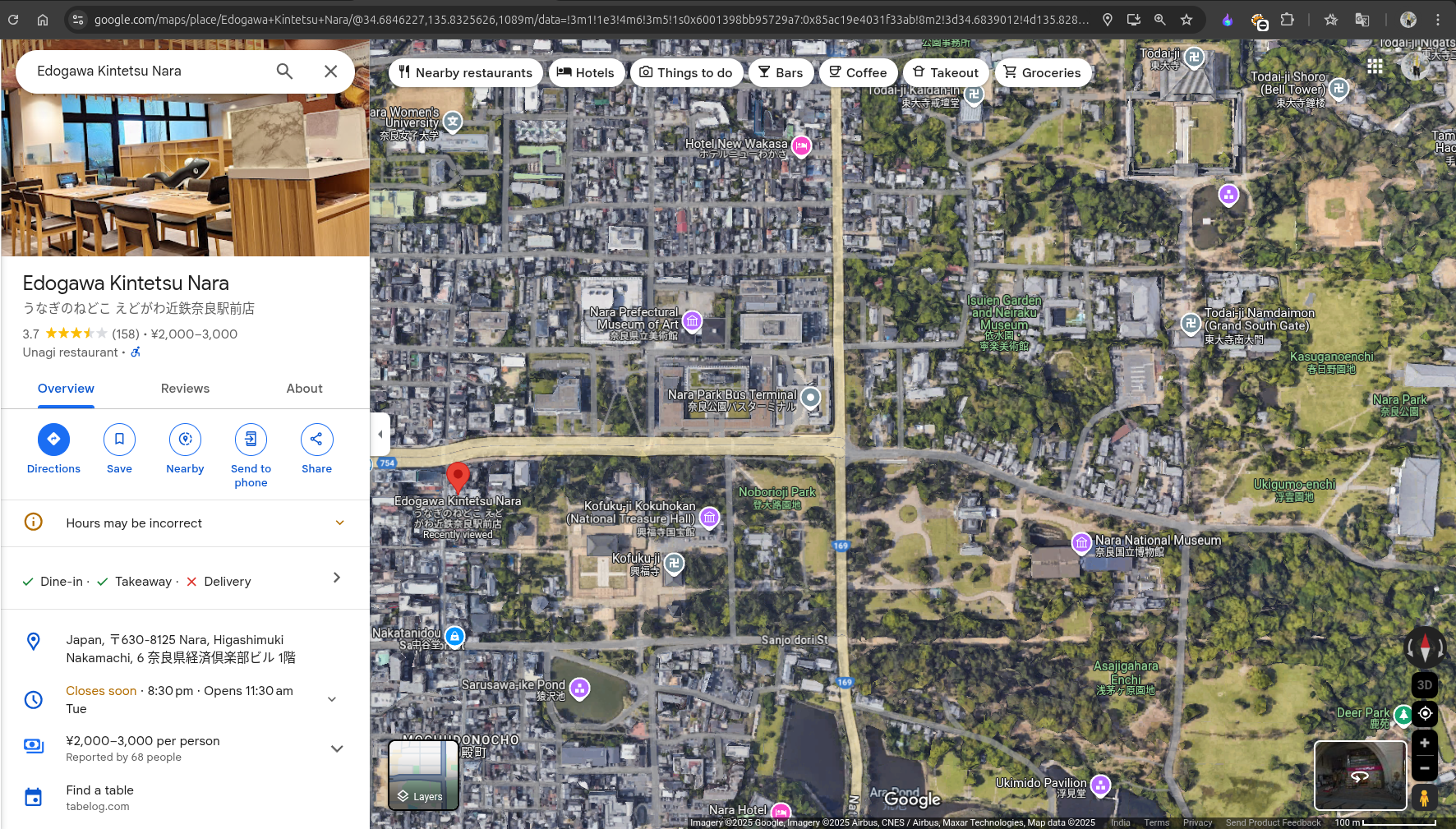

Finally, I searched for the restaurant on Google Maps and got the correct name; it is located near Nara Park.

Location: Edogawa Kintetsu Nara

Creating the Flag

With the gathered information, let’s construct the flag using the defined format.

Flag:

```
irisctf{Edogawa_Kintetsu_Nara}
```

One more solved!

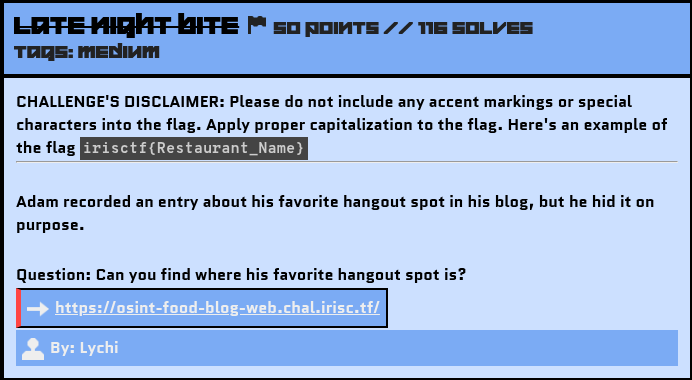

Late Night Bite

Challenge Description

Blog’s Link: https://osint-food-blog-web.chal.irisc.tf/

Solution

If you came directly to this challenge's writeup, I recommend first reading my Checking Out Of Winter writeup, which is more detailed and includes some important notes.

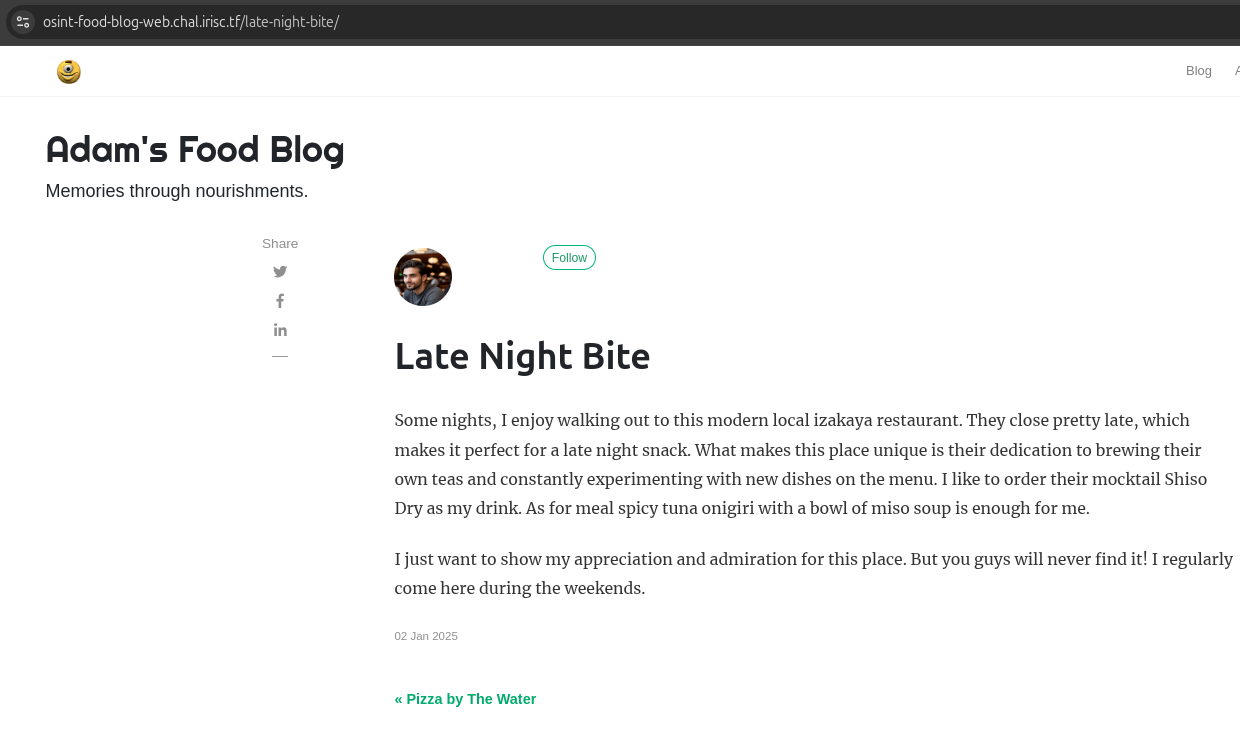

This challenge was a little tricky. Let’s start.

According to the description, Adam hid his favorite hangout spot, which he had recorded in the past.

At first, since Adam didn't point to the Late Night Bite post directly, I explored the other blog posts I hadn't covered yet, but didn't find anything related to the challenge.

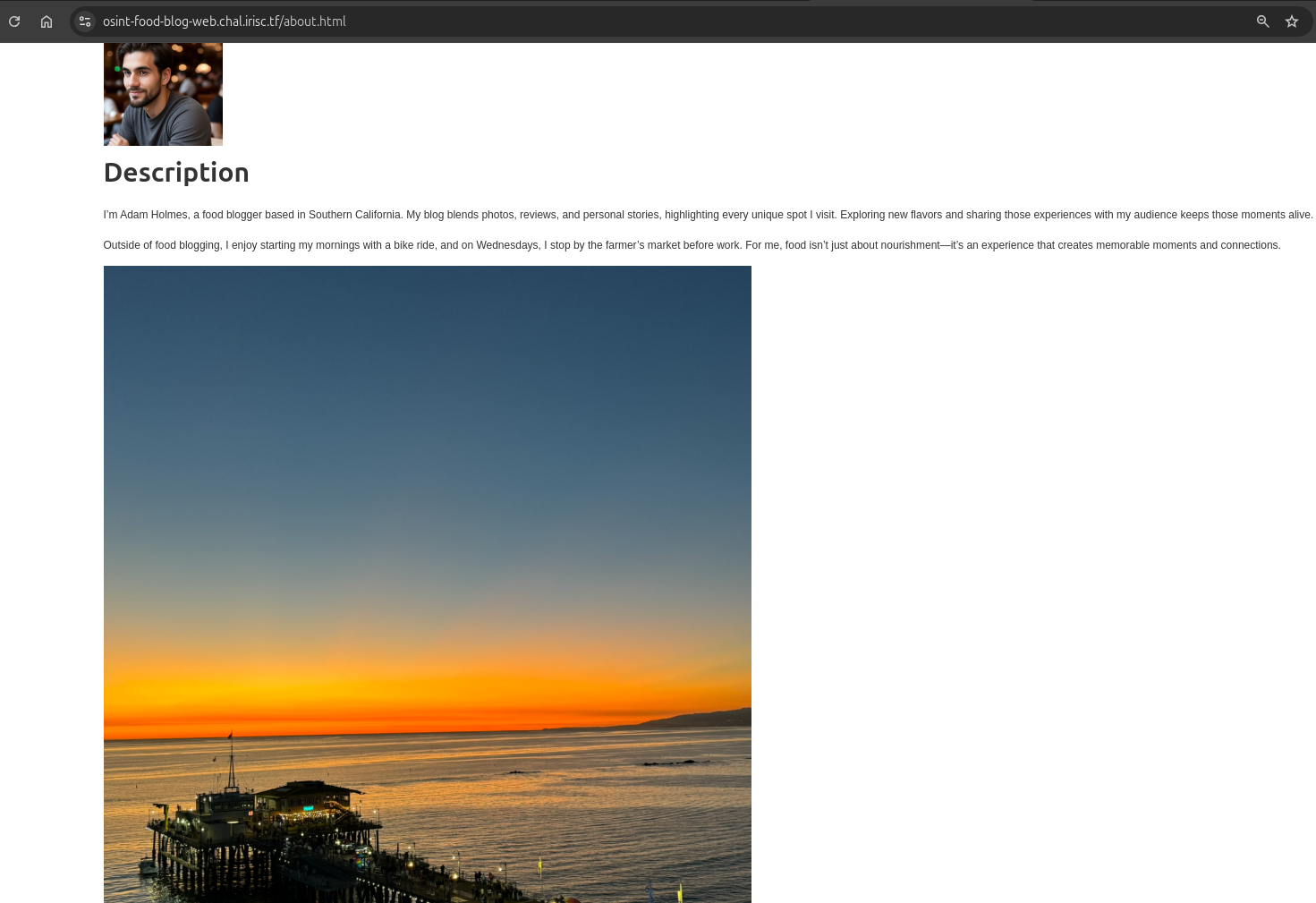

Then, I went to the About section of Adam’s site.

In the About section, I noticed that Adam is a food blogger based in Southern California and that on Wednesdays, he stops by the farmer’s market before work.

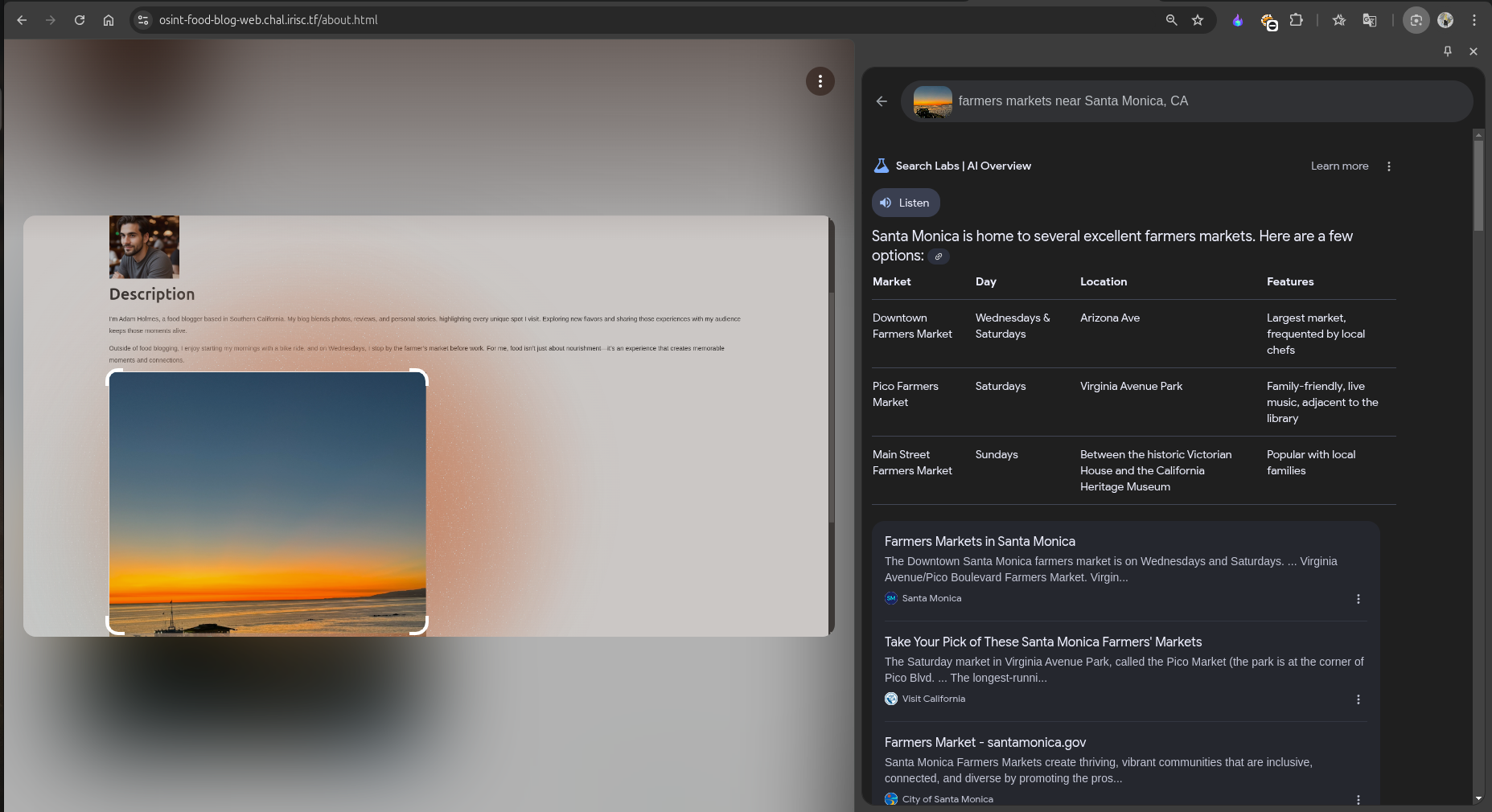

I speculated that the farmer's market might be near his house. When I did a Reverse Image Search on the image in the About section, I realized the location was Santa Monica Pier.

I then did another Reverse Image Search with the prompt:

I believed Adam was talking about the Downtown Farmers Market because of the Wednesday mention.

But I still had no clue about the restaurant's name, so I went through his site again. Since I have some foundational knowledge of web development and exploitation, I began by viewing the page source, where Adam had left this comment:

```html
<!-- MY SUPER SECRET POST: "late night bite" -->
```

Then, I checked the robots.txt file at https://osint-food-blog-web.chal.irisc.tf/robots.txt.

There, I found a mention of: Sitemap: /sitemap.xml.

I opened /sitemap.xml, which listed every page of his blog. After scrolling, I found the Late Night Bite post listed as:

```xml
<url>
```

So, finally, I found the blog that was hidden by Adam.
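The recon trail above (an HTML comment in the page source, then robots.txt, then sitemap.xml) can be scripted with only the Python standard library. This is a minimal sketch, not what I actually ran during the CTF: the helper names are hypothetical, and it assumes robots.txt uses the standard `Sitemap:` directive and the sitemap uses the usual `<loc>` elements.

```python
import re
import sys
import urllib.request
import xml.etree.ElementTree as ET
from urllib.parse import urljoin


def html_comments(html):
    """Return the text of every <!-- ... --> comment in an HTML page."""
    return [c.strip() for c in re.findall(r"<!--(.*?)-->", html, re.DOTALL)]


def sitemap_urls_from_robots(robots_txt, base_url):
    """Collect Sitemap: entries from robots.txt, resolving relative paths against base_url."""
    urls = []
    for line in robots_txt.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "sitemap" and value.strip():
            urls.append(urljoin(base_url, value.strip()))
    return urls


def sitemap_locs(xml_text):
    """Return every <loc> URL in a sitemap, ignoring the XML namespace prefix."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [el.text.strip() for el in root.iter()
            if el.tag.rsplit("}", 1)[-1] == "loc" and el.text]


def fetch(url):
    with urllib.request.urlopen(url) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__" and len(sys.argv) > 1:
    base = sys.argv[1]  # the blog's root URL
    print(html_comments(fetch(base)))
    for sitemap in sitemap_urls_from_robots(fetch(urljoin(base, "/robots.txt")), base):
        for loc in sitemap_locs(fetch(sitemap)):
            print(loc)
```

Saved under a hypothetical name such as recon.py, it would be run as `python3 recon.py https://osint-food-blog-web.chal.irisc.tf/`; scanning the printed `<loc>` list for pages not linked from the home page is what surfaces a hidden post.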

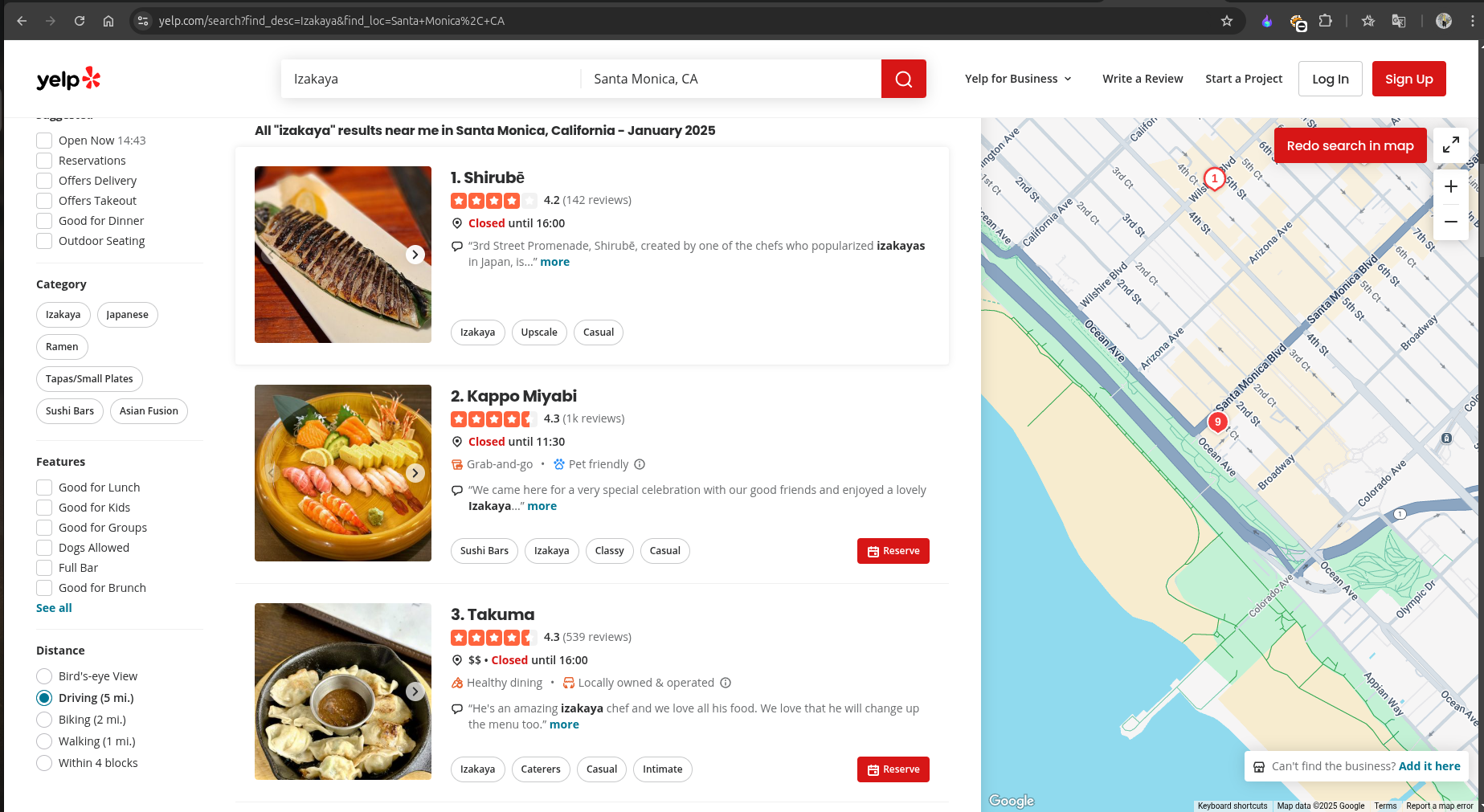

So, I quickly searched for a Modern Local Izakaya Restaurant with those features near Santa Monica on Google.

I found this helpful site:

The first restaurant, Shirubē, was near the Downtown Farmers Market, and it also had the features mentioned by Adam.

Creating the Flag

With the gathered information, let’s construct the flag using the defined format.

Flag:

```
irisctf{Shirube}
```

This marks the last challenge based on the Food Blog website. Thanks to lychi for creating this amazing challenge; I really enjoyed it.

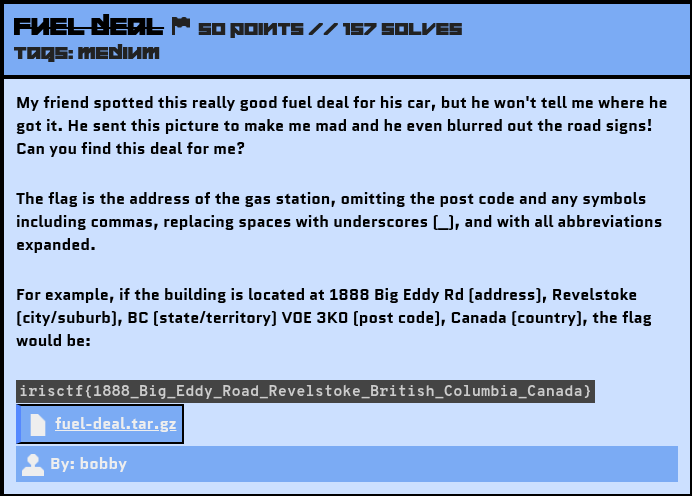

Fuel Deal

Challenge Description

Source File: fuel-deal.tar.gz

Solution

If you came directly to this challenge's writeup, I recommend first reading my Checking Out Of Winter writeup, which is more detailed and includes some important notes.

So again, let’s search for the keywords. I got this: Good Fuel Deal.

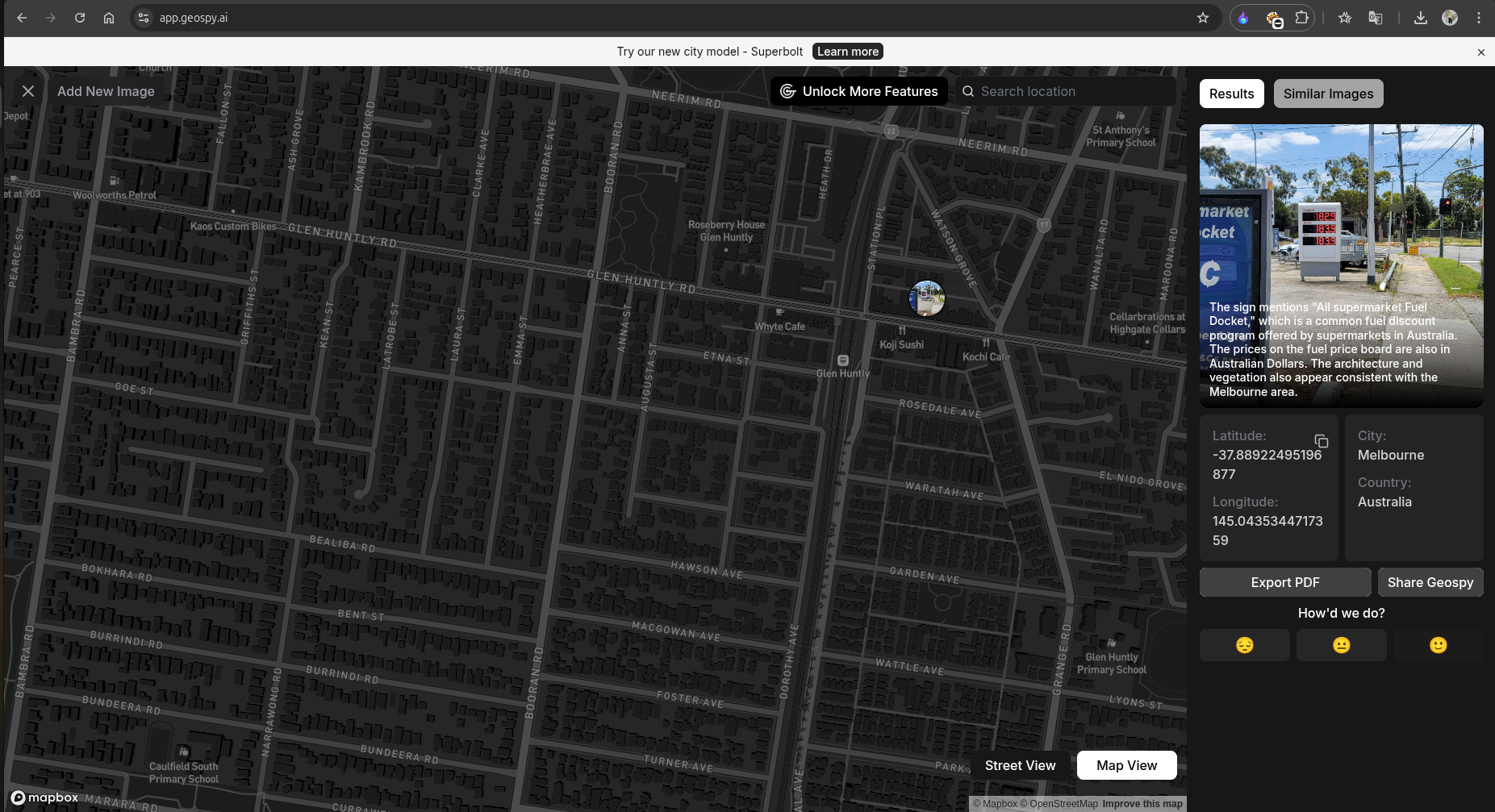

I did a Reverse Image Search but didn’t get much of a lead. Then, I tried one of the cool tools, GeoSpy AI, which showed me this result:

This AI doesn't always give the correct location, but since my Google image search results also mentioned Australia, I decided to try the suggested location, Melbourne, Australia.

I then searched Google for: Where were the cheap fuels (with the price shown in the provided image) in Melbourne? and got results such as:

The cheapest diesel available in Victoria costs 183.9 cents per litre.

Then I found this helpful site: https://www.carexpert.com.au/car-news/the-cheapest-petrol-and-diesel-around-australia-this-week

The site listed these rates:

```
Victoria-
```

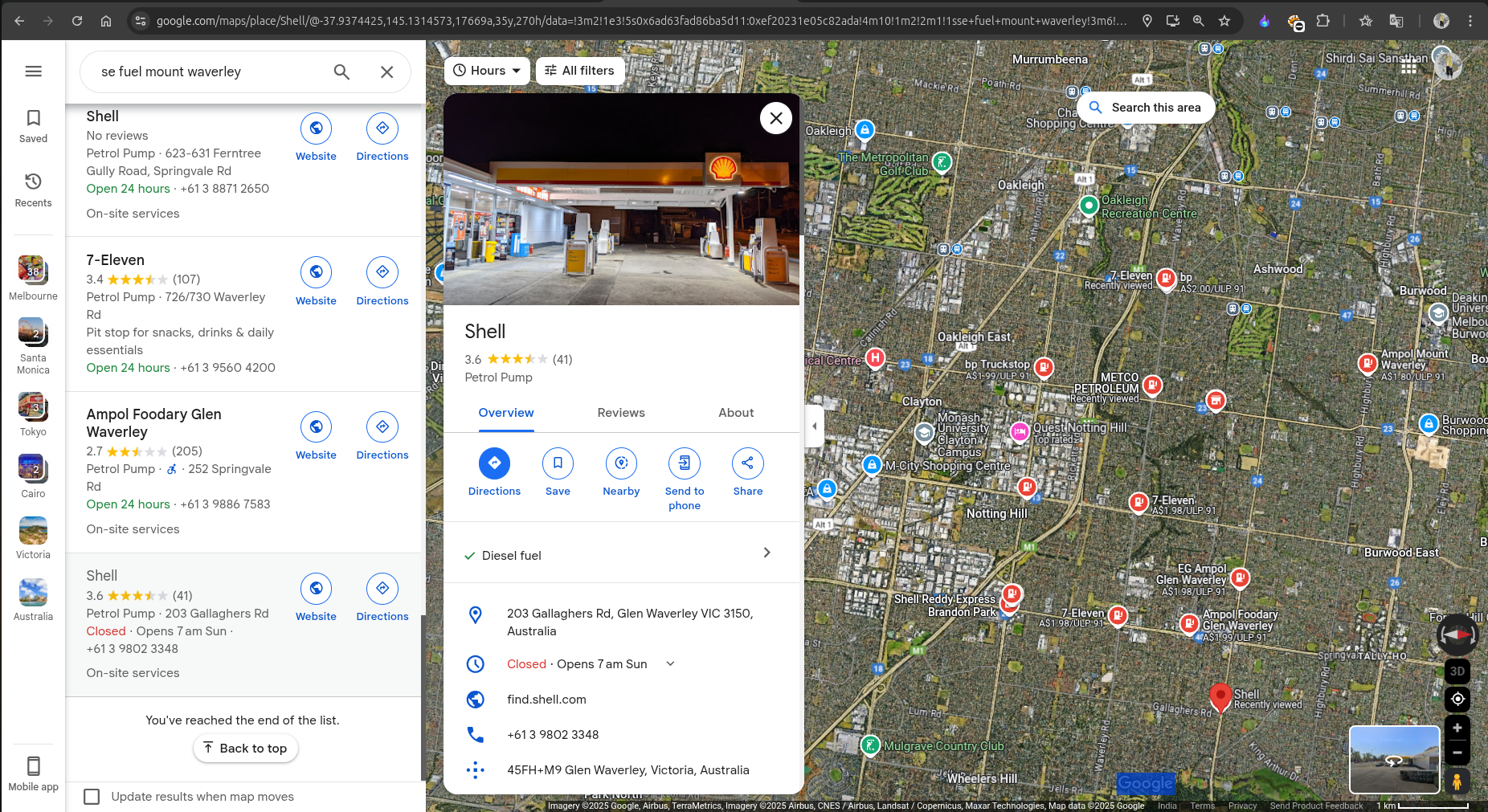

I searched each of the locations listed above in turn. Finally, when I searched for the SE Fuel Mount Waverley location on Google Maps, I found these fuel stations nearby:

After scrolling down, I identified that the Shell station was the desired fuel station. If you check the photos or the 360-degree view of that location, it was identifiable with the provided image.

Location: Shell

Creating the Flag

With the gathered information, let’s construct the flag using the defined format.

Flag:

```
irisctf{203_Gallaghers_Road_Glen_Waverley_Victoria_Australia}
```

Woah!!!

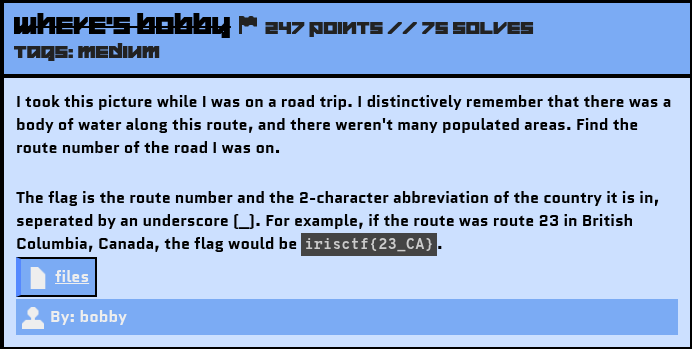

Where’s Bobby

Challenge Description

Source File: files

Hints released on Discord Announcements:

Solution

If you came directly to this challenge's writeup, I recommend first reading my Checking Out Of Winter writeup, which is more detailed and includes some important notes.

So again, let's search for keywords. I found these: Road Trip, Water Body, and sparsely populated areas, traces left by the author.

Provided Image:

I like the view of the image. This image was taken from a car on a road that appears to be in the middle of the mountains.

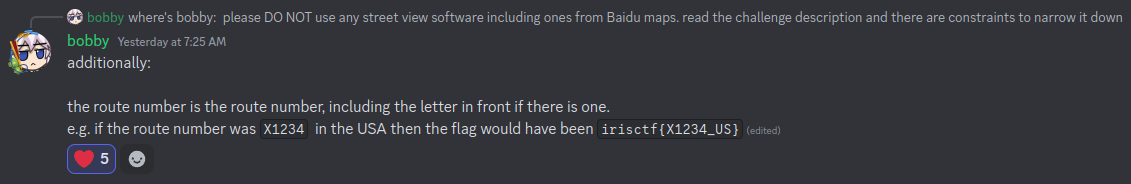

When we zoom in on the image, we can see Chinese text on the sign. I translated it using Google Translate:

Translated Text in the above image:

"Smooth traffic from Xishatun Bridge to Louzizhuang Bridge."

The sign showed the traffic conditions for the upcoming bridges, which means the author was approaching these bridges via a connecting road.

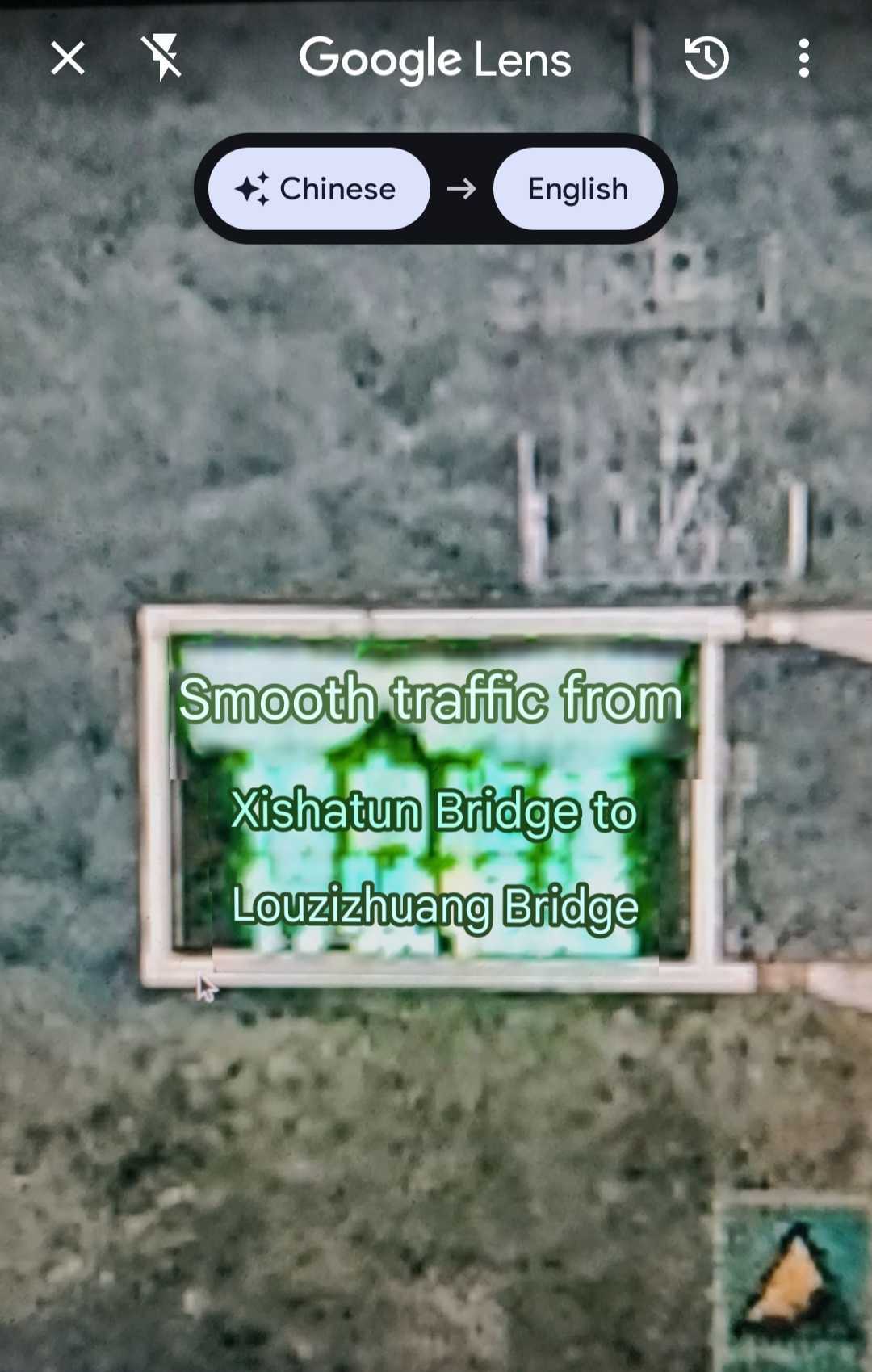

The possible routes near these two bridges are:

G7, G6, G4501, S216, S321, S3801.

The author described the route's surroundings as a water body and sparsely populated areas, and the road in the provided image was surrounded by mountains.

With these specifications, I started matching the description with the route numbers found earlier:

- I couldn’t find a water body around G7.

- G6 was heavily populated.

- G4501 was not deep into the mountains.

- S216 and S321 were primarily in city regions.

- Lastly, S3801 had water bodies and very sparsely populated areas around it, which led me to consider it for flag verification.

Creating the Flag

With the gathered information, let’s construct the flag using the defined format.

Hooray! It was the correct flag!!!

Flag:

```
irisctf{S3801_CN}
```

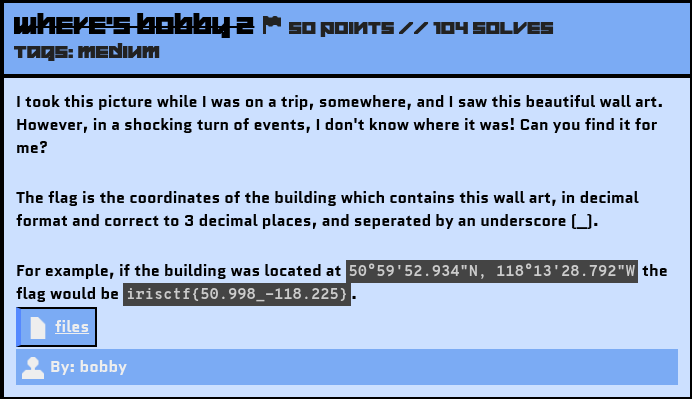

Where’s Bobby 2

Challenge Description

Source File: files

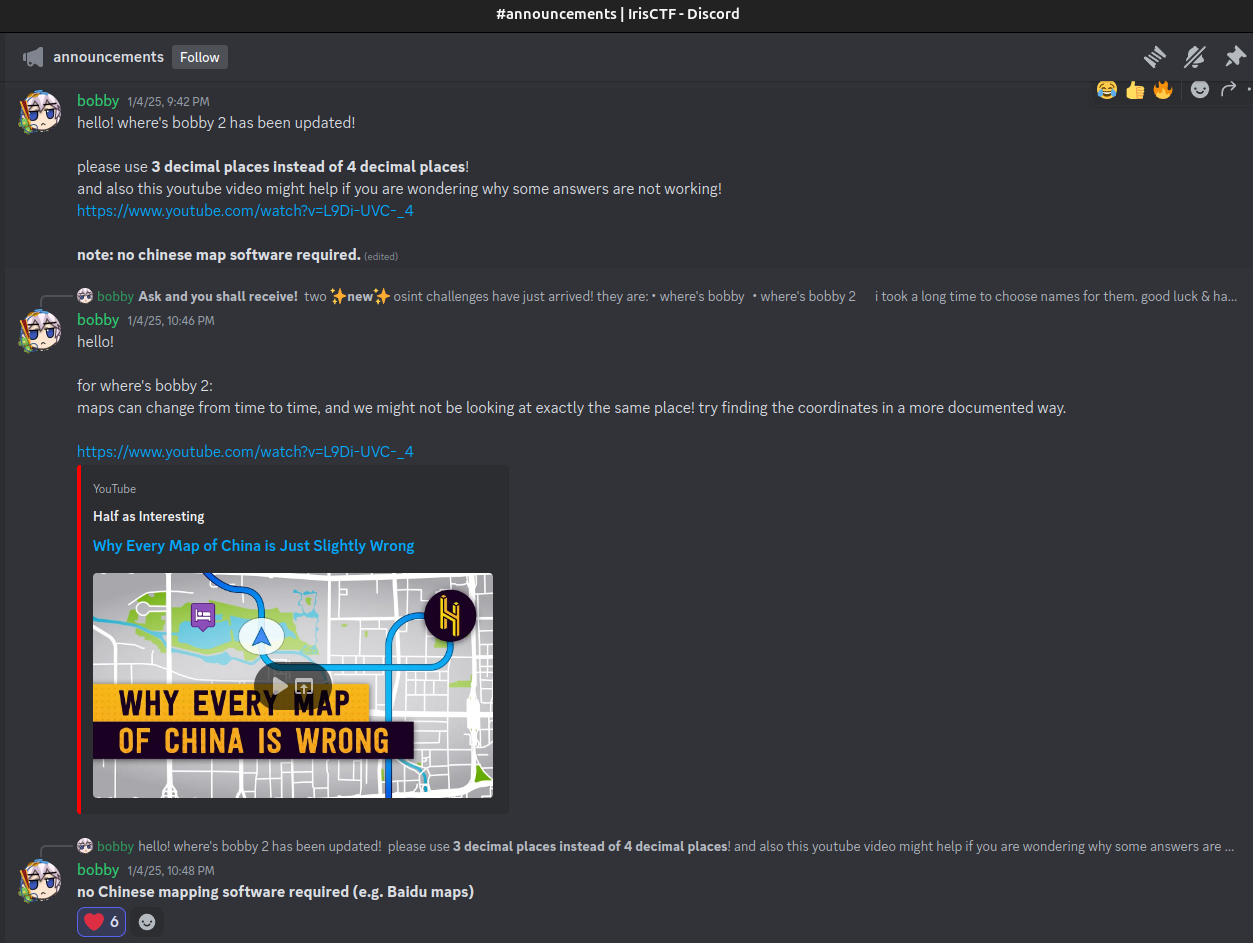

Hints released on Discord Announcements:

Youtube Video Link: https://www.youtube.com/watch?v=L9Di-UVC-_4

Solution

If you came directly to this challenge's writeup, I recommend first reading my Checking Out Of Winter writeup, which is more detailed and includes some important notes.

Provided Image:

I did a Reverse Image Search using Google Lens and found these helpful sites:

- https://ameblo.jp/yorinoto/entry-12733913890.html

After exploring these sites, I discovered that the location name is Bai Zi Wan Subway Station, and the wall art is "Hundred Ziwan Kissho" / "The Hundred Children Playing in Baizi Bay" painting.

The Challenge with Finding the Coordinates

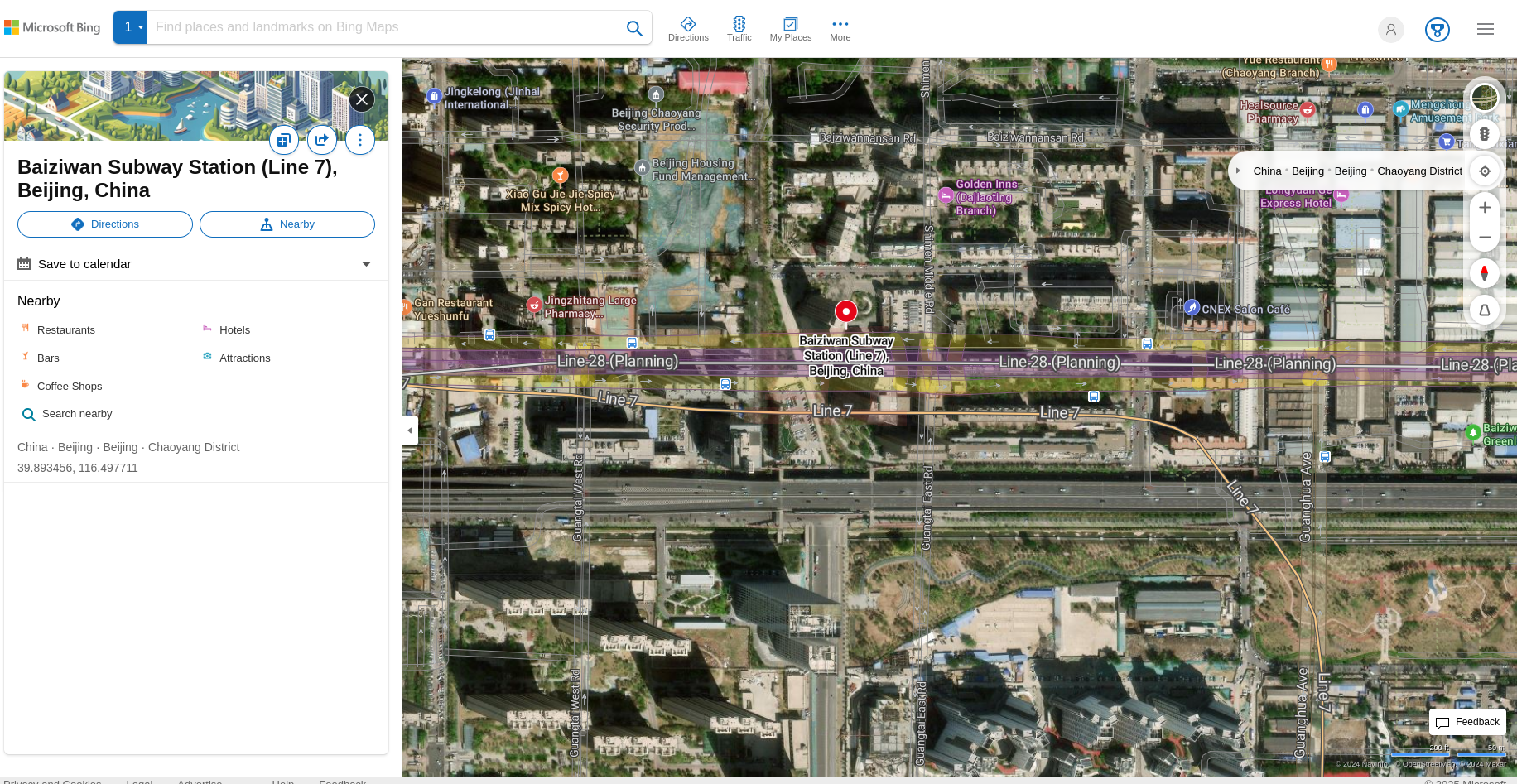

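Finding the exact coordinates was tough for non-Chinese users because Google Maps' road data for mainland China is offset from true GPS (WGS-84) positions, typically by a few hundred meters, a consequence of China's GCJ-02 coordinate system. You can learn more about this issue from the provided YouTube video.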
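For context on that shift: mainland-China road maps use the GCJ-02 datum, which applies a deterministic, nonlinear offset to WGS-84 coordinates. Below is a minimal Python sketch of the widely circulated open-source approximation of that offset (Krasovsky 1940 ellipsoid constants), plus a numerical inverse by fixed-point iteration. It is an illustration of the mechanism only, not something I used in the solve, and the transform is only meant for points inside mainland China.

```python
import math

# Krasovsky 1940 ellipsoid parameters used by the public GCJ-02 approximation.
A = 6378245.0
EE = 0.00669342162296594323


def _transform_lat(x, y):
    ret = -100.0 + 2.0 * x + 3.0 * y + 0.2 * y * y + 0.1 * x * y + 0.2 * math.sqrt(abs(x))
    ret += (20.0 * math.sin(6.0 * x * math.pi) + 20.0 * math.sin(2.0 * x * math.pi)) * 2.0 / 3.0
    ret += (20.0 * math.sin(y * math.pi) + 40.0 * math.sin(y / 3.0 * math.pi)) * 2.0 / 3.0
    ret += (160.0 * math.sin(y / 12.0 * math.pi) + 320.0 * math.sin(y * math.pi / 30.0)) * 2.0 / 3.0
    return ret


def _transform_lon(x, y):
    ret = 300.0 + x + 2.0 * y + 0.1 * x * x + 0.1 * x * y + 0.1 * math.sqrt(abs(x))
    ret += (20.0 * math.sin(6.0 * x * math.pi) + 20.0 * math.sin(2.0 * x * math.pi)) * 2.0 / 3.0
    ret += (20.0 * math.sin(x * math.pi) + 40.0 * math.sin(x / 3.0 * math.pi)) * 2.0 / 3.0
    ret += (150.0 * math.sin(x / 12.0 * math.pi) + 300.0 * math.sin(x / 30.0 * math.pi)) * 2.0 / 3.0
    return ret


def wgs84_to_gcj02(lat, lon):
    """Shift a WGS-84 point into GCJ-02 (only meaningful inside mainland China)."""
    dlat = _transform_lat(lon - 105.0, lat - 35.0)
    dlon = _transform_lon(lon - 105.0, lat - 35.0)
    rad = lat / 180.0 * math.pi
    magic = 1 - EE * math.sin(rad) ** 2
    sqrt_magic = math.sqrt(magic)
    dlat = (dlat * 180.0) / ((A * (1 - EE)) / (magic * sqrt_magic) * math.pi)
    dlon = (dlon * 180.0) / (A / sqrt_magic * math.cos(rad) * math.pi)
    return lat + dlat, lon + dlon


def gcj02_to_wgs84(lat, lon, tol=1e-9, max_iter=50):
    """Undo the shift numerically: adjust the guess until it maps onto (lat, lon)."""
    wlat, wlon = lat, lon
    for _ in range(max_iter):
        glat, glon = wgs84_to_gcj02(wlat, wlon)
        err_lat, err_lon = glat - lat, glon - lon
        if abs(err_lat) < tol and abs(err_lon) < tol:
            break
        wlat, wlon = wlat - err_lat, wlon - err_lon
    return wlat, wlon
```

Running wgs84_to_gcj02 on a Beijing point shows an offset on the order of a few thousandths of a degree, which is why a pin dropped from WGS-84 coordinates lands beside the building on the road layer. The solve itself didn't need this, since the zh-cn Wikipedia coordinates were accepted after rounding.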

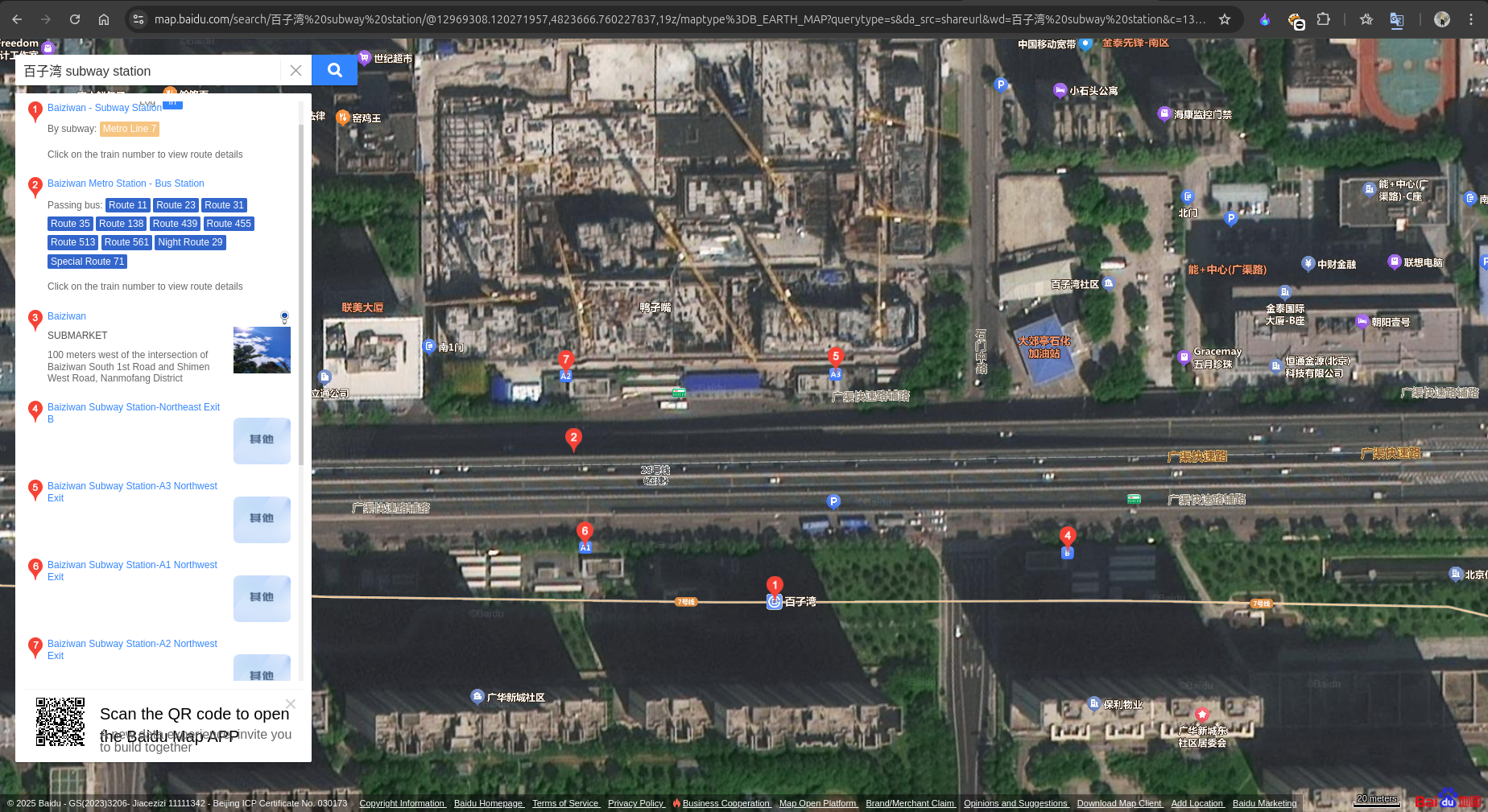

Although it was mentioned not to use Chinese maps or software, I took a look at Baidu Maps.

As shown, the main station and the exit buildings are located at the bottom part of the image.

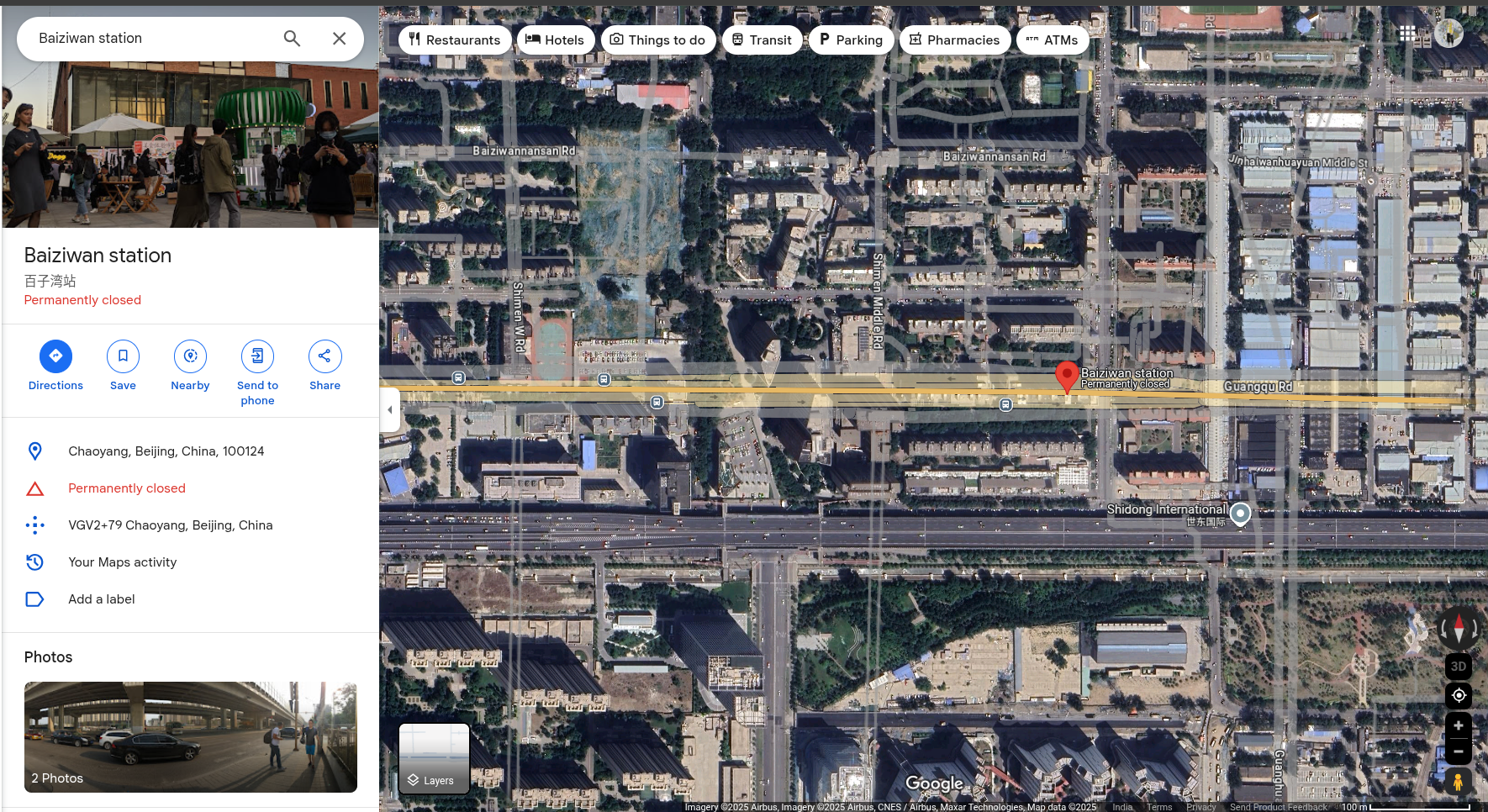

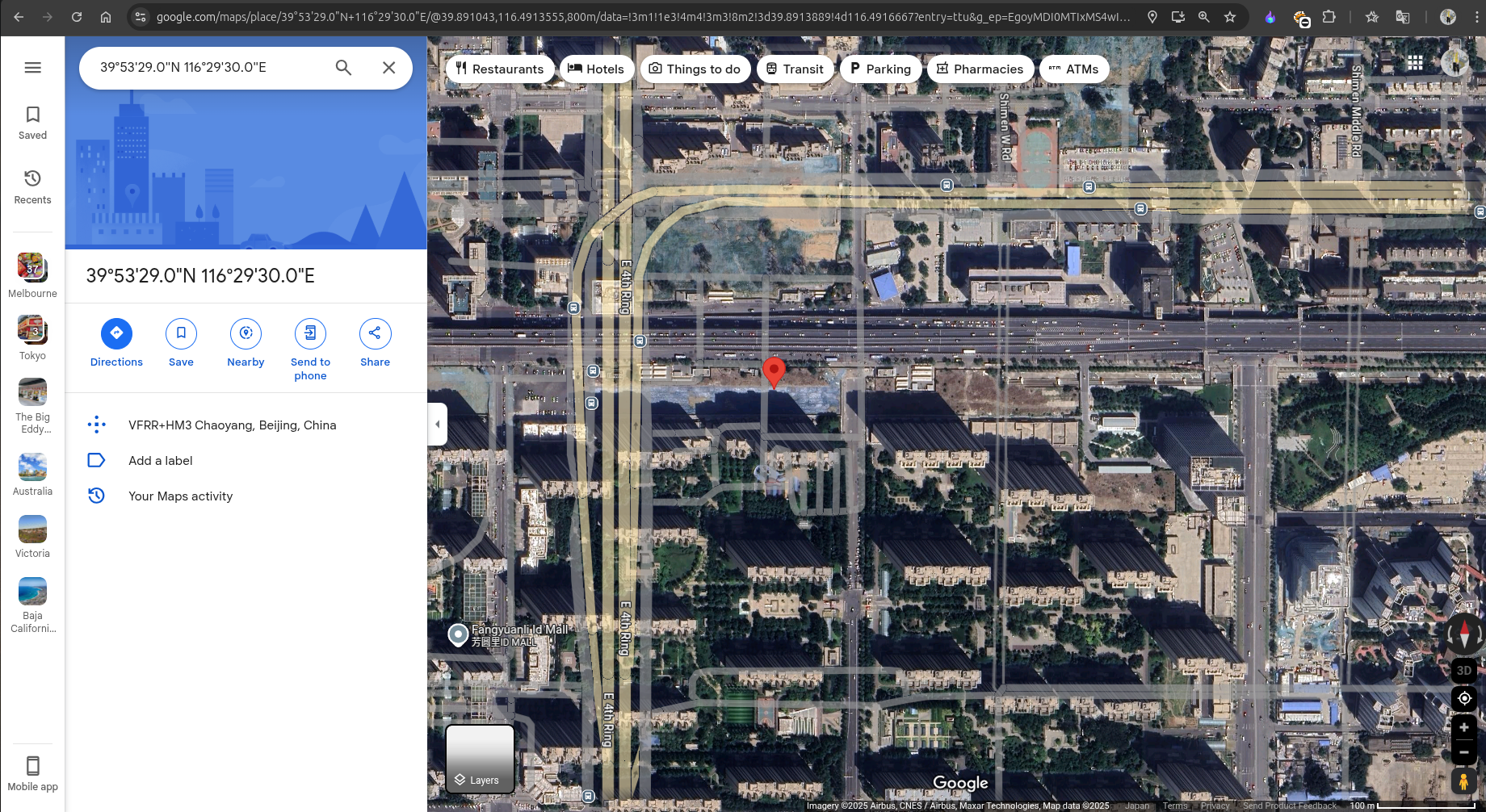

When I checked Google Maps, I got this result:

The coordinates were shifted, and submitting them didn’t yield the correct result.

I also tried the English version of Wikipedia, which had the coordinates for the station, but again, they were incorrect. Wikipedia then directed me to Bing Maps:

However, the coordinates from Bing Maps were also inaccurate.

Exploring Other Sources

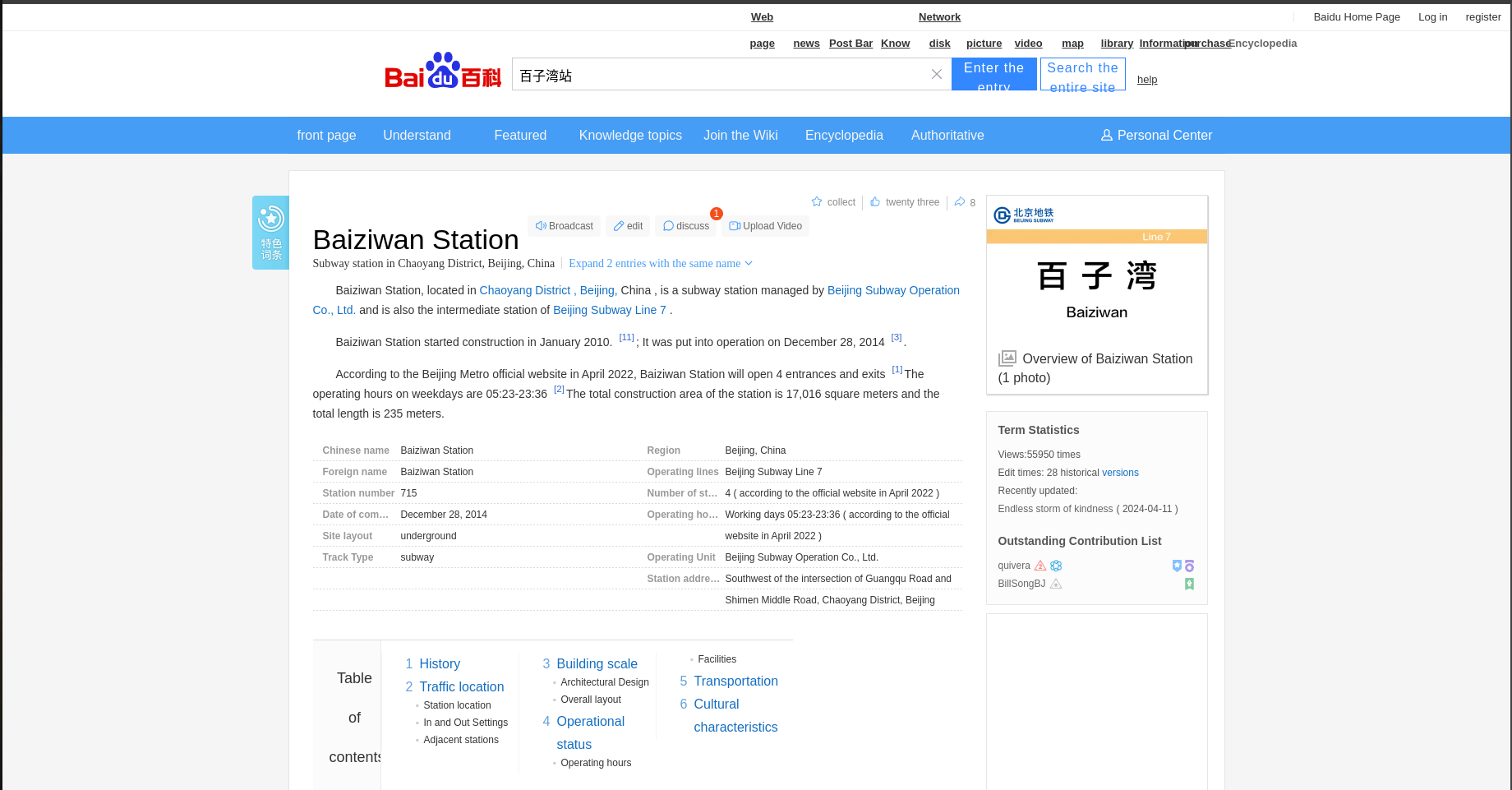

Next, I started searching for documented articles that might include the exact coordinates for the station. I looked at the Baidu Encyclopedia site:

Unfortunately, it didn’t mention any coordinates.

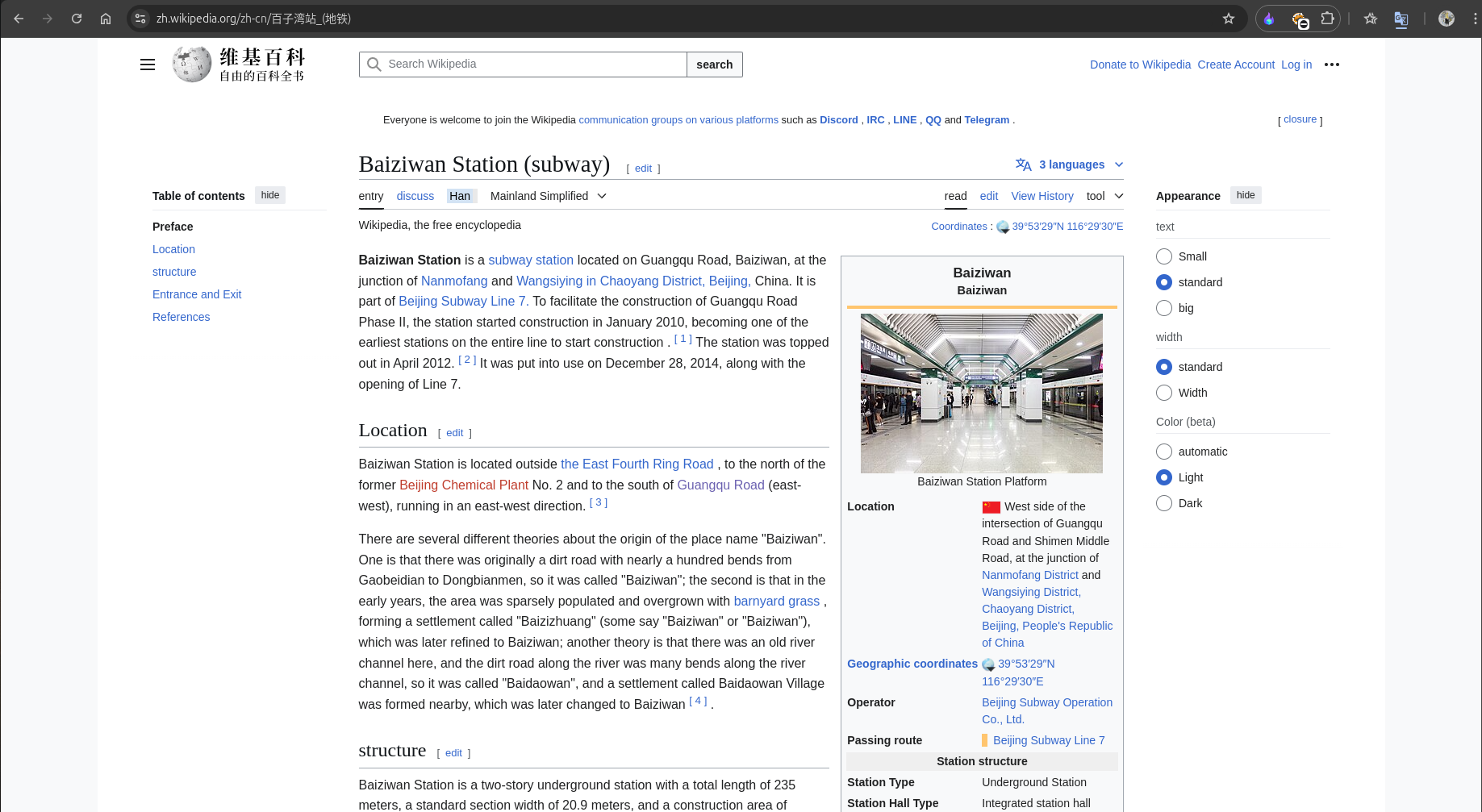

Finally, after more extensive searching 😵💫, I found the Chinese-language version of Wikipedia, specifically tailored for Simplified Chinese characters (used in Mainland China and Singapore).

Link: https://zh.wikipedia.org/zh-cn/%E7%99%BE%E5%AD%90%E6%B9%BE%E7%AB%99_(%E5%9C%B0%E9%93%81)

This page included the coordinates.

Verifying the Coordinates

I quickly entered the coordinates on Google Maps. Although the result was still shifted from the exact building, I refined the search as shown below:

Coordinates: 39.891389, 116.491667

I rounded the coordinates to 3 decimal places: 39.891, 116.492.
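The rounding and flag formatting fit in a couple of lines of Python. The irisctf{lat_lon} layout is taken from the flag below; the helper name is hypothetical.

```python
def coords_flag(lat: float, lon: float, places: int = 3) -> str:
    # Round each coordinate to `places` decimals and join them with an underscore.
    return f"irisctf{{{lat:.{places}f}_{lon:.{places}f}}}"


print(coords_flag(39.891389, 116.491667))  # irisctf{39.891_116.492}
```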

After submitting these refined coordinates, I confirmed that they were the desired ones for this challenge. 😮💨

Flag:

```
irisctf{39.891_116.492}
```

This marks the last challenge based on the files. Thanks to bobby for creating this amazing challenge; I really enjoyed it.

Forensics

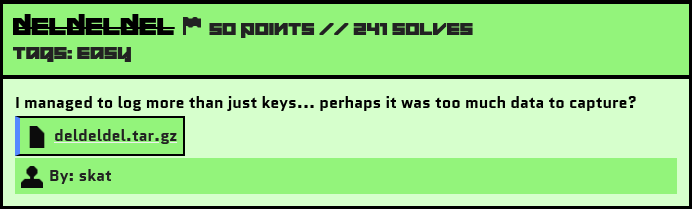

Deldeldel

Challenge Description

Source File: deldeldel.tar.gz

Solution

```
$ file klogger.pcapng
```

We received a .pcapng file for this challenge. When I opened it in Wireshark, the packets were exclusively based on the USB protocol.

The mention of “keys” and the file name klogger (key logger) hinted that this was likely a USB Keyboard/Keypad challenge.

USB protocol challenges are a standard type in the Forensics category of CTFs and often repeat with slight modifications. The goal is to extract the USB HID report data at the bottom of each packet (shown by Wireshark as Leftover Capture Data, field usb.capdata), which records the user's activity on the USB device.

Sources:

http://www.usb.org/developers/hidpage/

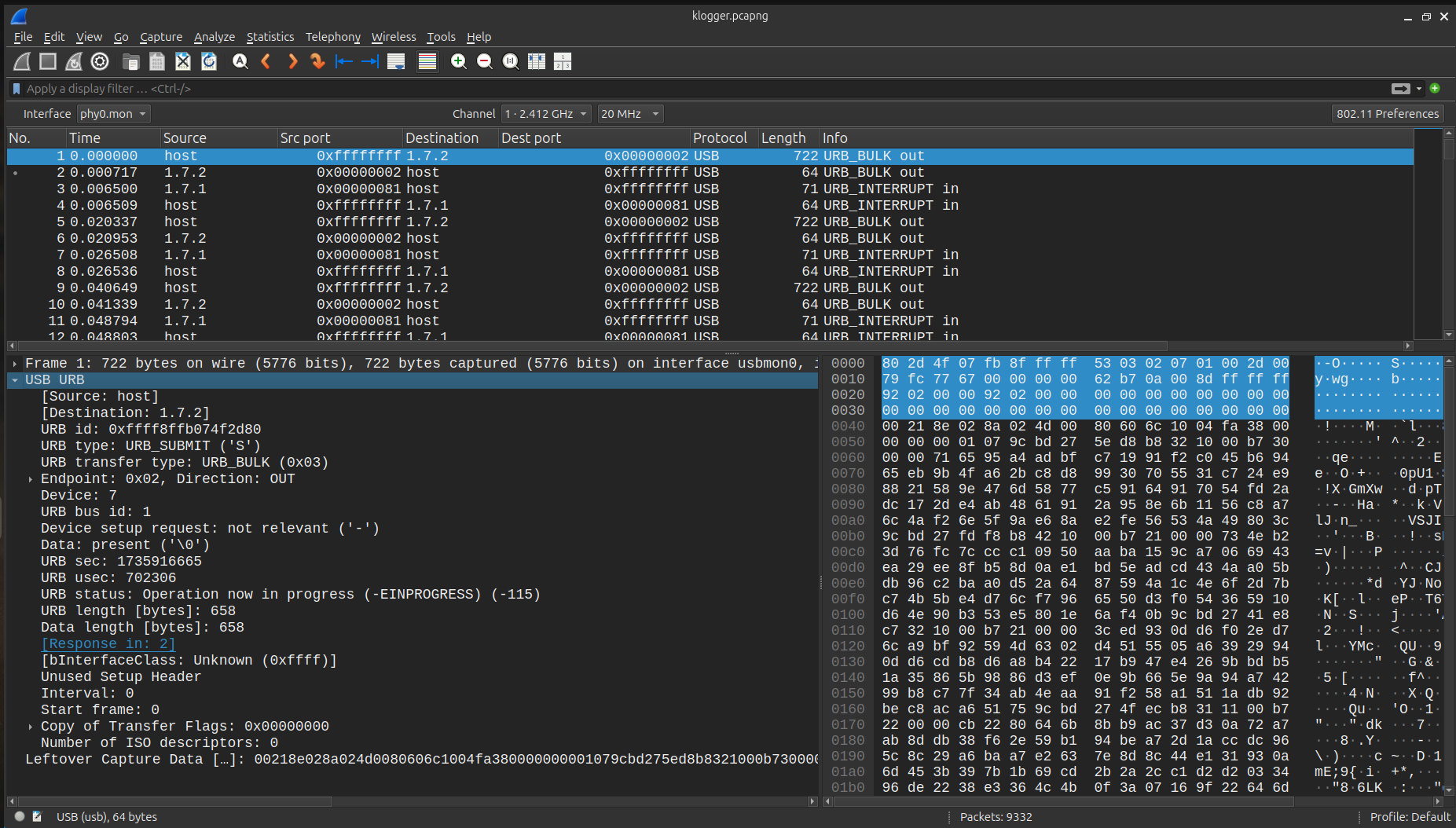

To analyze the user's activity, we check the flow of USB IN packets (packets sent from the device to the host), since these carry the user's input.

Filtering the USB IN Packets

To narrow down the USB IN packets, I applied the following filter in Wireshark:

```
usb.transfer_type == 1 && usb.endpoint_address.direction == 1
```

Here, we can see source addresses such as 1.3.2, 1.7.1, 1.5.1, and 1.5.2. These are USB addresses in bus.device.endpoint form, not IP addresses.

Extracting USB CAP Data

A boot-protocol keyboard report is 8 bytes: a modifier byte, a reserved byte, and up to six key codes. I started by analyzing the packets from 1.3.2, as they carried 8-byte capture data.

To extract the USB capture data, I used the tshark CLI.

```
$ tshark -r klogger.pcapng -Y "usb.transfer_type == 1 && usb.endpoint_address.direction == 1 && usb.src==1.3.2" -T fields -e usb.capdata > data_1.3.2.txt
```

Parsing USB CAP Data

For parsing the USB capture data into readable data, I used the following script:

```python
import sys
```

```
$ python3 usbkeyboard.py data_1.3.2.txt
```

However, the data retrieved from 1.3.2 was garbage. I then moved on to another source address (1.5.1), which also had 8-byte capture data, and repeated the tshark steps.

```
$ python3 usbkeyboard.py data_1.5.1.txt
```
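Since my usbkeyboard.py isn't reproduced in full above, here is a minimal sketch of how such a decoder typically works. It assumes boot-protocol 8-byte keyboard reports (byte 0 = modifier bits, byte 2 = first key code), a US layout, and hex input as produced by the tshark command above. The key table covers only letters, digits, and common symbols, and the sketch treats Backspace (0x2A) as deleting the previous character, which is a design choice worth revisiting for a challenge named Deldeldel.

```python
import sys

# HID Keyboard/Keypad usage IDs -> (unshifted, shifted) characters, US layout.
KEYMAP = {0x04 + i: (c, c.upper()) for i, c in enumerate("abcdefghijklmnopqrstuvwxyz")}
KEYMAP.update({0x1E + i: pair for i, pair in enumerate(zip("1234567890", "!@#$%^&*()"))})
KEYMAP.update({
    0x28: ("\n", "\n"),  # Enter
    0x2C: (" ", " "),    # Space
    0x2D: ("-", "_"),
    0x2E: ("=", "+"),
    0x2F: ("[", "{"),
    0x30: ("]", "}"),
    0x33: (";", ":"),
    0x34: ("'", '"'),
    0x36: (",", "<"),
    0x37: (".", ">"),
    0x38: ("/", "?"),
})
BACKSPACE = 0x2A
SHIFT_BITS = 0x02 | 0x20  # left Shift | right Shift bits in the modifier byte


def decode_reports(lines):
    """Turn hex-encoded 8-byte keyboard reports into the typed text."""
    typed = []
    for line in lines:
        hexstr = line.strip().replace(":", "")  # older tshark prints colon-separated bytes
        if len(hexstr) != 16:
            continue  # not an 8-byte keyboard report
        report = bytes.fromhex(hexstr)
        modifier, keycode = report[0], report[2]
        if keycode == 0:
            continue  # key release / idle report
        if keycode == BACKSPACE:
            if typed:
                typed.pop()  # apply the deletion
            continue
        chars = KEYMAP.get(keycode)
        if chars:
            typed.append(chars[1] if modifier & SHIFT_BITS else chars[0])
    return "".join(typed)


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        print(decode_reports(f))
```

To see the keystrokes exactly as typed, including the deleted ones, replace the `typed.pop()` branch with `typed.append("[DEL]")`.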

Creating the Flag

After extracting the data, I cleaned up the received flag and formatted it properly as per the challenge’s defined format.

Flag:

```
irisctf{this_keylogger_is_too_hard_to_use}
```

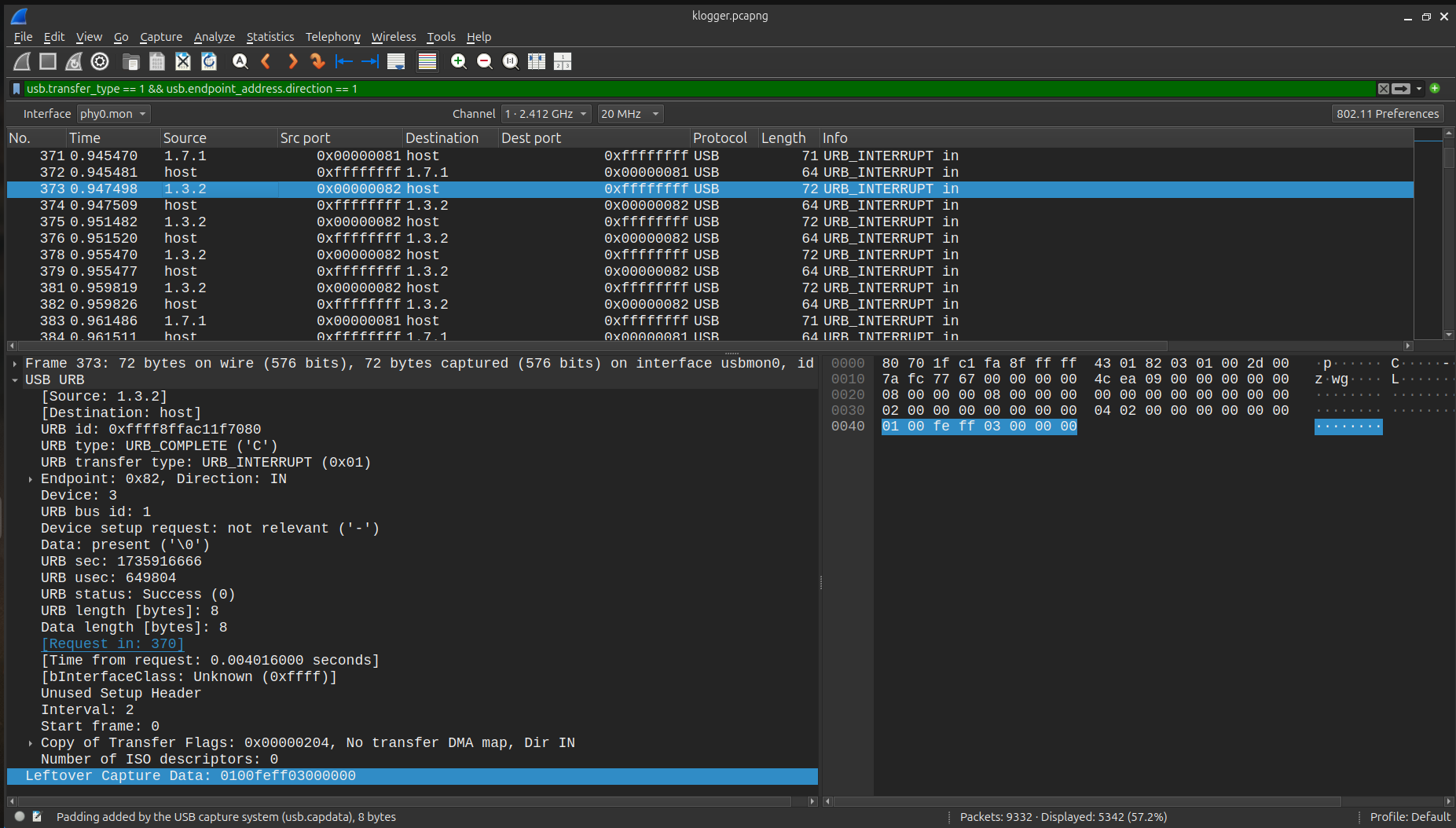

Windy Day

Challenge Description

Solution

We received a memory dump for this challenge. The title, Windy Day, hints towards Windows OS, and the description, lost track of an important note..., suggests we may need to retrieve some crucial information from the memory.

Initial Analysis

I started analyzing the memory dump using Volatility3.

```
$ python3 vol.py -f memdump.mem windows.info
```

As usual, I first listed all the processes.

```
$ python3 vol.py -f memdump.mem windows.pslist
```

Among them, I noticed multiple firefox.exe processes running. I decided to dump all the files of the Firefox process with PID 3036:

```
$ python3 vol.py -f memdump.mem windows.dumpfiles --pid 3036
```

This command yielded some DLLs and database files such as .db and .sqlite.

```
permissions.sqlite favicons.sqlite cookies.sqlite places.sqlite formhistory.sqlite storage.sqlite cert9.db key4.db
```

Exploring the Databases

I analyzed these database files using DB Browser for SQLite.

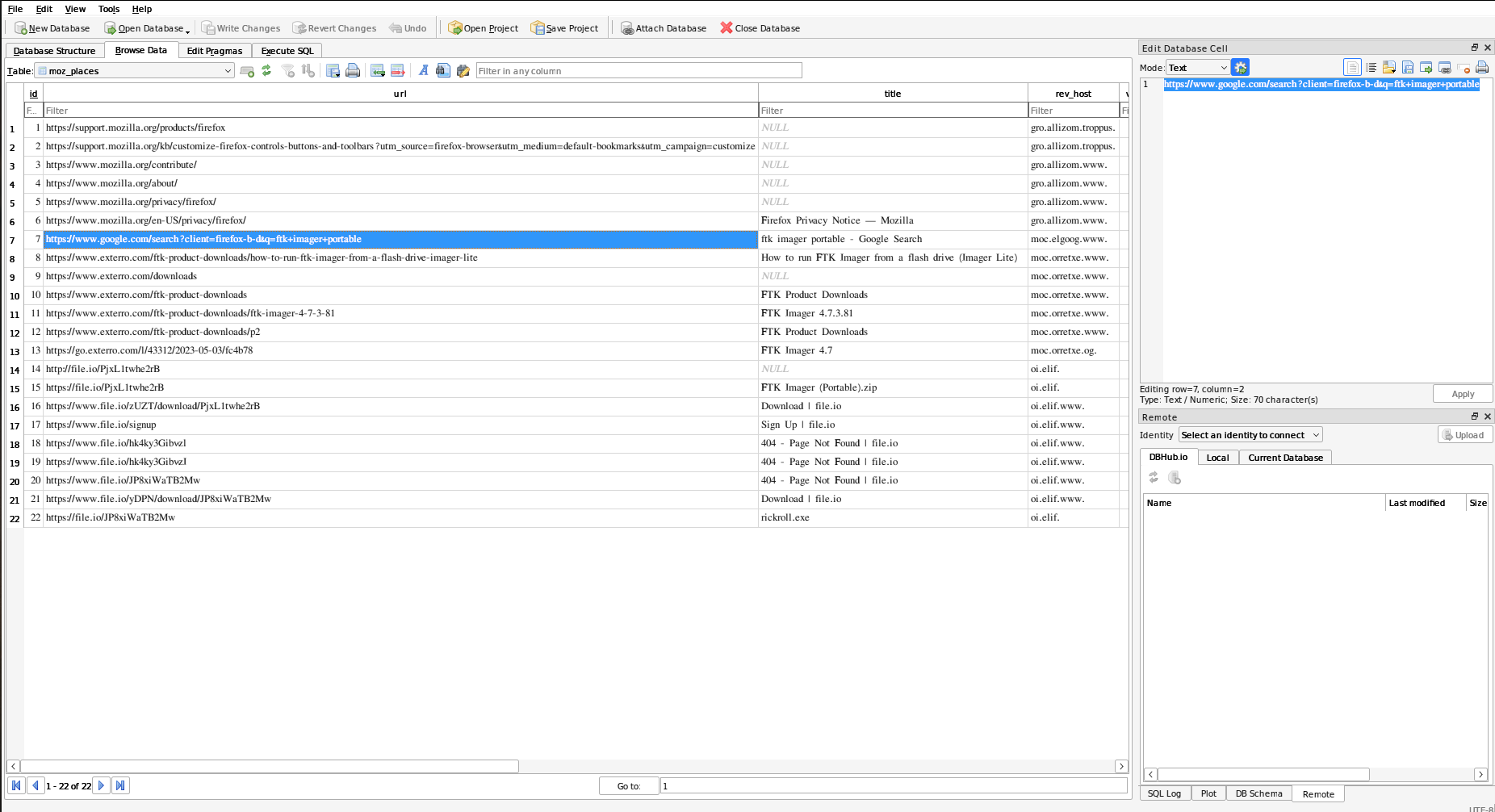

The places.sqlite and cookies.sqlite files contained browsing data (history and cookies for the visited sites). Below is a snapshot of the data from places.sqlite:

Initially, I suspected that the flag might have been uploaded to a file-sharing site like file.io. I explored the file.io site links but found no useful results.
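To skim the Firefox history without a GUI, the same places.sqlite can be queried directly. A minimal sketch, assuming the standard Firefox schema (`moz_places` for URLs, `moz_historyvisits` for visits, `visit_date` in microseconds since the Unix epoch). Files carved with windows.dumpfiles can be incomplete, in which case SQLite may refuse to open them.

```python
import sqlite3
import sys
from datetime import datetime, timezone

# Standard Firefox history schema: moz_places holds URLs, moz_historyvisits holds visits.
QUERY = """
SELECT p.url, p.title, v.visit_date
FROM moz_historyvisits AS v
JOIN moz_places AS p ON p.id = v.place_id
ORDER BY v.visit_date
"""


def history(db_path):
    """Return (url, title, visit time in UTC) tuples, oldest first."""
    con = sqlite3.connect(db_path)
    try:
        rows = con.execute(QUERY).fetchall()
    finally:
        con.close()
    # visit_date is stored in microseconds since the Unix epoch.
    return [(url, title, datetime.fromtimestamp(ts / 1_000_000, tz=timezone.utc))
            for url, title, ts in rows]


if __name__ == "__main__" and len(sys.argv) > 1:
    for url, title, when in history(sys.argv[1]):
        print(when.isoformat(), url, title or "")
```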

Further Investigation

Next, I dumped the memory of the firefox.exe process for deeper analysis:

```
$ python3 vol.py -f memdump.mem windows.memmap --pid 3036 --dump
```

This generated a pid.3036.dmp file. I ran strings on this file and noticed several Google search URLs.

```
$ strings pid.3036.dmp | grep "https://www.google.com/search?"
```

Among the search queries, one appeared to be encoded in Base64.
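Pulling the query text out of those URLs and testing each one for Base64 can also be automated. A minimal sketch, assuming the strings output was first saved to a text file (for example with `strings pid.3036.dmp > strings.txt`); the helper names are hypothetical. Note that parse_qs turns a literal `+` into a space, so a Base64 query containing an unescaped `+` would not decode.

```python
import base64
import binascii
import re
import sys
from urllib.parse import parse_qs, urlparse

# Google search URLs as they appear in strings output (stop at whitespace or quotes).
SEARCH_URL = re.compile(r"https://www\.google\.com/search\?[^\s\"'<>]+")


def search_queries(text):
    """Yield the q= parameter of every Google search URL found in text."""
    for url in SEARCH_URL.findall(text):
        for q in parse_qs(urlparse(url).query).get("q", []):
            yield q


def try_base64(s):
    """Return the UTF-8 text of s if it is valid Base64, else None."""
    try:
        return base64.b64decode(s, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="latin-1") as f:
        for q in dict.fromkeys(search_queries(f.read())):  # de-duplicate, keep order
            decoded = try_base64(q)
            print(q, "->", decoded if decoded is not None else "")
```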

Decoding the Base64 String

I decoded the Base64 string using the following command:

```
$ echo "aXJpc2N0ZntpX2FtX2FuX2lkaW90X3dpdGhfYmFkX21lbW9yeX0=" | base64 -d
```

Boom! We got our flag!!!

Flag:

```
irisctf{i_am_an_idiot_with_bad_memory}
```

Alternate Approach

Later, I realized the same result could have been reached faster by running strings on the main memdump.mem file and grepping for the Base64 encoding of "irisctf{" (the encoded flag begins with aXJpc2N0Zn), which would have saved some effort. 😛
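A subtlety with that shortcut: only part of the Base64 encoding of "irisctf{" is fixed, because each Base64 character carries 6 bits and the last characters also depend on whatever follows. The sketch below keeps only the fully determined prefix (aXJpc2N0Zn), scans the whole dump through mmap, and decodes each hit. It assumes the encoded flag starts at the beginning of its Base64 token and is stored as ASCII; a UTF-16 copy in memory would not match. The function names are hypothetical.

```python
import base64
import binascii
import mmap
import re
import sys


def stable_b64_prefix(plain: bytes) -> bytes:
    """Base64 characters of `plain` that don't depend on the bytes after it."""
    # Each output character encodes 6 bits, so len(plain) * 8 // 6 characters are fixed.
    return base64.b64encode(plain)[: len(plain) * 8 // 6]


def find_b64_flags(blob, known: bytes = b"irisctf{"):
    """Find Base64 tokens in blob that decode to something starting with `known`."""
    prefix = stable_b64_prefix(known)
    pattern = re.compile(re.escape(prefix) + rb"[A-Za-z0-9+/]*={0,2}")
    hits = []
    for m in pattern.finditer(blob):
        token = m.group()
        token = token[: len(token) // 4 * 4]  # drop a trailing partial quantum
        try:
            decoded = base64.b64decode(token, validate=True)
        except binascii.Error:
            continue
        if decoded.startswith(known):
            hits.append(decoded)
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        for hit in find_b64_flags(mm):
            print(hit.decode("utf-8", errors="replace"))
```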

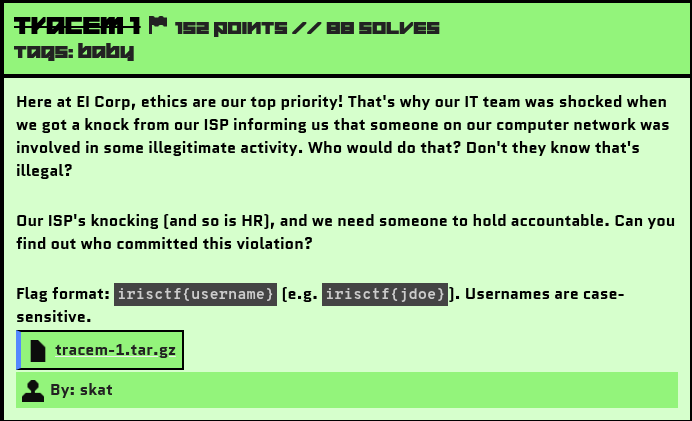

Tracem1

Challenge Description

Source File: tracem-1.tar.gz

Solution

For this challenge, we need to identify the culprit. We were provided with a logs.json file packed with DHCP, DNS, Active Directory, and syslog logs.

- The DHCP Logs show the assignment of IP addresses.

- The DNS Logs contain records of IP address resolutions for websites.

- The Active Directory Logs include the names of the employees within the company.

- The Syslog Logs are primarily focused on authentication and also contain the names of the employees.

Let’s analyze the file:

```json
{"host": "primary", "source": "stream:dns", "sourcetype": "stream:dns", "_time": "2024-12-04 06:30:39.50", "data": {"timestamp": "2024-12-04 06:30:38.816111", "protocol_stack": "ip:udp:dns", "transport": "udp", "src_ip": "10.49.0.2", "src_port": 53, "dest_ip": "10.49.81.65", "dest_port": 2561, "transaction_id": 54938, "answers": [{"type": "AAAA", "class": "IN", "name": "dropbox.com", "addr": "2620:100:6040:18::a27d:f812"}]}}
```

We can see references to many links accessed by different employees, along with their IP addresses and MAC addresses.

Since the challenge was related to computer networks, I started by checking all the URLs accessed by the employees.

```
$ grep -o '"name": *"[^"]*"' logs.json | sed -E 's/"name": *"([^"]*)"/\1/'
```

These were the URLs:

```
connectivitycheck.gstatic.com
```

There were some safe sites, as well as sites belonging to the company itself, Evil Insurance Corp (evil-insurance.corp).

Out of all the sites, I found the following to be very suspicious:

```
copious-amounts-of-illicit-substances-marketplace.com
```

Since these sites were also matching the keywords mentioned in the description, I proceeded to check who accessed both of these sites.

```
$ strings logs.json | grep -E "copious-amounts-of-illicit-substances-marketplace.com|breachforums.st"
```

As expected, the same IP address (10.33.18.209) accessed both of these sites.
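The same pivot (which client IPs received DNS answers for each suspicious domain) can be done with a short Python script instead of grep. A minimal sketch, assuming logs.json holds one JSON object per line in the layout of the DNS record shown above; the client is taken as dest_ip when the record comes from port 53 (a resolver response) and src_ip otherwise.

```python
import json
import sys
from collections import defaultdict


def dns_clients(lines):
    """Map each resolved domain name to the set of client IPs involved."""
    clients = defaultdict(set)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        if event.get("sourcetype") != "stream:dns":
            continue
        data = event["data"]
        # A response comes from the resolver (port 53), so the client is the destination.
        client = data["dest_ip"] if data.get("src_port") == 53 else data["src_ip"]
        for answer in data.get("answers", []):
            clients[answer["name"]].add(client)
    return clients


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        clients = dns_clients(f)
    suspicious = ["copious-amounts-of-illicit-substances-marketplace.com", "breachforums.st"]
    print(set.intersection(*(clients[d] for d in suspicious)))
```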

Let’s analyze the syslog:

```json
{"host": "primary", "source": "udp:514", "sourcetype": "syslog", "_time": "2024-12-04 04:58:36.95", "data": {"_raw": "2024-12-04 04:58:35.622504||https://sso.evil-insurance.corp/idp/profile/SAML2/Redirect/SSO|/idp/profile/SAML2/Redirect/SSO|5b52053ac1ab1f4935a3d7d6c6aa4ff0|authn/MFA|10.33.18.209|Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3 Edge/16.16299|https://sso.evil-insurance.corp/ns/profiles/saml2/sso/browser|llloyd||uid|service.evil-insurance.corp|https://sso.evil-insurance.corp/idp/sso|url:oasis:names:tc:SAML:2.0:protocol|urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect|urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST|kzYQV+Jk2w3KkwmRjR+HK4QWVQ3qzLPLgA5klV2b8bQT+NLYLeqCZw5xUGKbx1U1158jlnUYRrILtVTtMkMdbA==|urn:oasis:names:tc:SAML:2.0:nameid-format:transient|_60b0fd4b0ed5bba3474faeb85b3944e|2024-12-04 04:58:35.622504|_c4b56d58-625b-49aa-b859-4a2068422979||||urn:oasis:names:tc:SAML:2.0:status:Success|||false|false|true", "timestamp": "2024-12-04 04:58:35.622504", "NLYLeqCZw5xUGKbx1U1158jlnUYRrILtVTtMkMdbA": "=|urn:oasis:names:tc:SAML:2.0:nameid-format:transient|_60b0fd4b0ed5bba3474faeb85b3944e|2024-12-04"}}
```

I then checked the syslog for this IP address and found the name of the employee associated with it: llloyd.
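The IP-to-username lookup can be sketched the same way. The field positions below (IP at index 6, username at index 9 of the pipe-separated `_raw` string) are inferred from the single SSO line shown above, so treat them as an assumption that every SSO syslog line follows that layout.

```python
import json
import sys

# Positions inferred from the sample SSO line: ...|authn/MFA|<ip>|<user agent>|<url>|<username>|...
IP_FIELD, USER_FIELD = 6, 9


def users_for_ip(lines, ip):
    """Return the usernames that authenticated from `ip` in the syslog SSO records."""
    users = set()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        if event.get("sourcetype") != "syslog":
            continue
        fields = event["data"].get("_raw", "").split("|")
        if len(fields) > USER_FIELD and fields[IP_FIELD] == ip:
            users.add(fields[USER_FIELD])
    return users


if __name__ == "__main__" and len(sys.argv) > 2:
    with open(sys.argv[1]) as f:
        print(users_for_ip(f, sys.argv[2]))
```

Usage: `python3 who.py logs.json 10.33.18.209`, where who.py is a hypothetical filename.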

Creating the Flag

With the gathered information, I constructed the flag using the defined format, and boom, that was the correct flag.

Flag:

```
irisctf{llloyd}
```

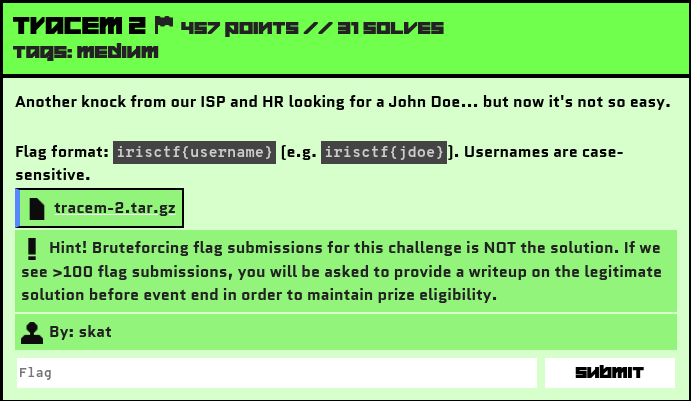

Tracem2

Challenge Description

Source File: tracem-2.tar.gz

Yeah, some of the teams had 600+ and 1300+ attempts. 😂

Solution

This challenge is a continuation of the Tracem1 challenge. For better context, you can refer to my Tracem1 write-up. Unfortunately, I was only able to solve this challenge partially during the CTF duration 🥹. I delved too deeply into analysis and missed a critical clue. However, I’ll share all the data and steps I took while solving it.

Challenge Overview

We received a logs.json file for this challenge. Similar to Tracem1, I began analyzing the logs.

Here are some notable sites accessed in the logs.json file:

```
detectportal.firefox.com
```

These two sites seemed suspicious:

- thepiratebay.org

- generic-illicit-activities-hub.org

I then identified the user who accessed both sites:

```
$ strings logs.json | grep -E "thepiratebay.org|generic-illicit-activities-hub.org"
```

Suspicious User Identification

The suspicious user had the following attributes:

```
IP Address: 10.18.21.121
```

Activity Timeline of the Suspicious User

| Event | Time |
|---|---|
| Requests address 10.18.21.121 | 2024-12-04 08:49:20.52 |
| Is assigned that address | 2024-12-04 08:49:21.00 |
| Accesses www.google.com | 2024-12-04 08:49:34.58 |
| Accesses 2.arch.pool.ntp.org | 2024-12-04 08:51:18.72 |
| Accesses thepiratebay.org | 2024-12-04 08:51:51.12 |
| Accesses generic-illicit-activities-hub.org | 2024-12-04 09:05:01.12 |
| Releases the IP address | 2024-12-04 09:22:01.12 |

This was another challenge in the Tracem series, so it wasn’t going to be straightforward to solve via syslog logs. Additionally, the suspicious IP (10.18.21.121) didn’t have any corresponding syslog logs, making direct identification of the user more difficult.

Since I had already established that the suspicious IP (10.18.21.121) was the only one to access the two suspicious sites (thepiratebay.org and generic-illicit-activities-hub.org), I decided to investigate further.

To narrow down potential leads, I began analyzing who else accessed 2.arch.pool.ntp.org.

I got these results:

```
10.50.103.29
```

Initial Misstep

At this point, I noticed that the user accessed 2.arch.pool.ntp.org, a relatively common URL. I assumed it wasn’t suspicious and did not cross-reference other IPs that accessed this site during the same timeframe. This was my critical oversight.

Feel free to skip the rest if you'd like; below, I share what I explored after this step.

Investigation Details

Extraction and Analysis

- MAC Addresses:

I extracted all MAC addresses to look for other spoofed addresses but found no results.

- IP Segmentation:

I identified recurring IP ranges in the logs:

```
10.17.X.X
```

- Syslog SSO Logs:

Only the following IP ranges appeared in syslog SSO logs:

```
10.17.X.X
```

So the username had to come from one of these IP ranges.

Looking at these IPs, I thought this challenge might be based on Network Segmentation. It appeared that there were four buildings and three subnets, which Skat later confirmed on Discord.

- Network Proximity Assumptions:

I hypothesized that the suspicious user might have moved laterally to a new subnet, maintaining the same host/octet structure.

From 10.17.X.X and 10.19.X.X:

- Likely Subnets: 10.17.21.xxx, 10.17.121.xxx, etc.

- Likely Hosts: 10.17.x.121.

Despite exploring these, I found no leads.

Then I moved on to checking the full logs.json file around the suspicious IP's timeline. By then, this was my last remaining challenge and I was very tired, so I couldn't search thoroughly.

Aftermath

After the competition, Jakob (on Discord) pointed out that I should have checked the IPs accessing 2.arch.pool.ntp.org around the timeline of the suspicious user (10.18.21.121). 😿

Key Table (from Jakob’s Write-Up):

| Timestamp | Events | Notes |
|---|---|---|
| 2024-12-04 08:45:33.555166 | 10.17.161.10 (mhammond) disconnects from network | releases 10.17.161.10 + pause in traffic from 10.17.161.10 |
| 2024-12-04 09:42:00.907257 | IP assignment to 10.17.161.10 (mhammond) | traffic from 10.17.161.10 continues |

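This IP (10.17.161.10) was offline for the entire lifetime of the suspicious address: it was released at 08:45:33, about four minutes before 10.18.21.121 was requested (08:49:20), and reassigned at 09:42:00, about twenty minutes after 10.18.21.121 was released (09:22:01). The username associated with this IP was mhammond, making them the culprit.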
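The deciding check (find a host whose DHCP gap fully contains the suspicious IP's lifetime) can be sketched in a few lines of Python. This is an illustration, not Jakob's code: building the gaps dictionary from the raw DHCP logs is left out because their JSON layout isn't shown in this writeup, the mhammond gap comes from the key table, and the second host is made up for contrast.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S.%f"  # timestamp layout used in logs.json


def parse(ts):
    return datetime.strptime(ts, FMT)


def covering_gaps(gaps, start, end):
    """Return hosts whose offline gap (release -> next assignment) contains [start, end]."""
    s, e = parse(start), parse(end)
    return [host for host, (gone, back) in gaps.items() if parse(gone) <= s and e <= parse(back)]


# Suspicious session: DHCP request for 10.18.21.121 until its release.
SESSION = ("2024-12-04 08:49:20.52", "2024-12-04 09:22:01.12")
GAPS = {
    # From the key table: release and re-assignment of 10.17.161.10.
    "10.17.161.10 (mhammond)": ("2024-12-04 08:45:33.555166", "2024-12-04 09:42:00.907257"),
    # Hypothetical host that was offline for only part of the session.
    "10.17.0.99 (hypothetical)": ("2024-12-04 09:00:00.0", "2024-12-04 09:10:00.0"),
}

print(covering_gaps(GAPS, *SESSION))  # ['10.17.161.10 (mhammond)']
```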

Flag:

```
irisctf{mhammond}
```

Reflection

This challenge reinforced the importance of checking all data points and avoiding assumptions during investigations. Despite missing the critical clue during the competition, I gained valuable insights and improved my analysis techniques.

Thanks, Skat, for the cool challenges.